-

Meaning, It's Just Semantics

Semantics, the computability of meaning.

We know the importance of meaning when communicating with others. We carefully choose our words when communicating business concepts with coworkers, customers, partners, consultants. However, we often do not consider assuring the computer understands the meaning of our data when running a data mining or AI project.

Without meaning from the start, there is little chance of understanding the result.

Most of us know the well-worn adage, Garbage In, Garbage Out. Without meaning, data is not far from being garbage from the computer's point of view. Seeing only our raw data, we reduce the power of machine learning and AI to simple pattern recognition. We can keep adding data, but the AI can't go much further.

Extending the adage, Meaning In, Meaning Out, enables our data mining projects to base their models on business concepts. Further, they are explainable in business terms.

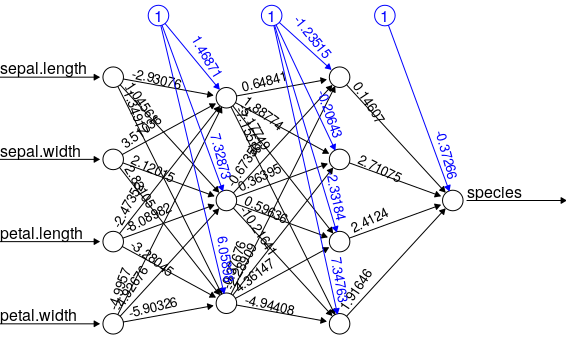

What do we mean by explainable? Consider the following depiction of a simple neural network:

The model shows what a neural network "learned" using some data about flowers. The model can differentiate between a couple of species of iris, but how it is making that decision is opaque; the definition of the model is not one we can understand.Now consider a similar model making decisions impacting your business. Even if the conclusions are correct, you'd like to understand them and even learn from them. In some cases, it might become necessary to explain them.

Our focus is tying meaning to your data. This approach allows your machine learning and analytic models to be expressed using business terminology rather than purely mathematical values like those in the image above.

But, your data has meaning! We often confuse our understanding of the data with how the computer "sees" it. Our systems present data in ways meaningful to us. When we view our data, we understand its meaning. However, we are determining the meaning through our interpretation. Without a formal machine-readable approach to include meaning in our data, our understanding is invisible to the computer and cannot inform any data mining process or resulting models.

-

Better Tooling

Here is a brief overview of several technologies we combine to create meaningful data models.

Graph databases are the perfect environment when you have disparate data sources, formats, and schemas. Evolving data models do not require a reworking of graph databases. This flexibility is quite different from the structure inherent in relational and document data stores. Although these platforms are well suited to manage our enterprise data, they are less effective when flexibility for data mining projects is needed. When building machine learning models that incorporate meaning, a graph structure simplifies and speeds the data aggregation process.

The addition of semantics gives us a powerful ally in the form of meaning and logic available within our database. Semantic graphs make connecting data between different systems a no-risk scenario. There is no heavyweight refactoring to do. The relationships in the data, supporting meaning, exist as data assertions rather than database structures. If we need to change a definition, we remove the asserted connection: no harm, no foul, and no time spent creating scripts to reload and retranslate data.

Combining these features with a machine learning environment (a common AI approach) moves AI beyond its limited "learning" abilities to a platform that recognizes concepts, not just patterns. This capability is instrumental as a foundation for understandable and explainable computer-based intelligence. Whether cybersecurity, environmental science, healthcare, or other businesses which seek to extract knowledge from data, models based on concepts deliver immediate value.