The Cognitive Corporation™ – Effective BPM Requires Data Analytics

The Cognitive Corporation™ is a framework introduced in an earlier posting. The framework outlines a set of general capabilities that work together to support a growing and thinking organization. In this post I will drill into Learn, one of the least mature of those capabilities in terms of enterprise solution adoption.

Business rules, decision engines, BPM, complex event processing (CEP): these all evoke images of computers making speedy decisions for the benefit of our businesses. The infrastructure, technologies and software that provide these solutions (SOA, XML schemas, rule engines, workflow engines, etc.) support the decision automation process. However, they don't know which decisions to make.

The BPM-related components we acquire provide the how of decision making (send an email, route a claim, suggest an offer). Learning, supported by data analytics, provides a powerful path to the what and why of automated decisions (send this email to that person because they are at risk of defecting, route this claim to that underwriter because it looks suspicious, suggest this product to that customer because they appear to be buying these types of items).

I'll start by outlining the high-level journey from data to rules and the cyclic nature of that journey. Data leads to rules, rules beget responses, responses manifest as more data, new data leads to new rules, and so on. Therefore, the journey does not end with the definition of a set of processes and rules. This link between updated data and the determination of new processes and rules is the essence of any learning process, and it provides a key function for the cognitive corporation.
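To make the cycle concrete, here is a minimal sketch of the data-to-rules loop. Everything in it is hypothetical and deliberately toy-sized: the record shape `(days_since_last_purchase, defected)`, the `derive_rule` function (which simply uses the average inactivity of past defectors as a threshold), and the `observe` callback standing in for the real-world responses a rule produces. The point is only the shape of the loop, where each round's responses are folded back into the data the next rule is learned from.

```python
from typing import Callable, List, Tuple

# Hypothetical record shape: (days_since_last_purchase, defected)
Record = Tuple[int, bool]

def derive_rule(data: List[Record]) -> int:
    """Data leads to rules: flag customers whose inactivity reaches the
    average inactivity of past defectors (a deliberately toy rule)."""
    defectors = [days for days, defected in data if defected]
    if not defectors:
        return 10**9  # no evidence yet, so effectively flag no one
    return sum(defectors) // len(defectors)

def learning_cycle(data: List[Record],
                   observe: Callable[[int], List[Record]],
                   rounds: int) -> List[int]:
    """Run the data -> rules -> responses -> data loop a fixed number of times."""
    data = list(data)                   # copy so the caller's list is untouched
    history = []
    for _ in range(rounds):
        threshold = derive_rule(data)   # data leads to rules
        history.append(threshold)
        responses = observe(threshold)  # rules beget responses
        data.extend(responses)          # responses become new data
    return history

# Stand-in for the real world: pretend each rule surfaces one new defector
# who went quiet 20 days sooner than the current threshold.
history = learning_cycle([(10, False), (40, True), (60, True)],
                         observe=lambda t: [(t - 20, True)], rounds=3)
print(history)  # [50, 43, 38]
```

The rule drifts as new evidence arrives, which is the behaviour the cycle describes; in practice the "derive" step is the human-assisted analytics process covered in the rest of this post.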

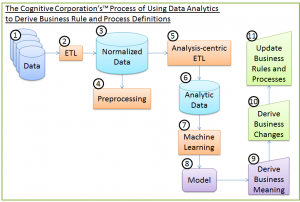

The following image depicts the overall process of using data analytics to take corporate information and derive new business processes and rules, hence learning. I will refer to the numbered items throughout this post.

Data analytics is a broad term with many subtleties. In this case I'm focused on its machine (computer) learning aspect. I'm skipping past the need to identify key data sources, create canonical definitions, and normalize information. The phrase "Garbage In, Garbage Out" is overused, yet accurate: it applies to data analytics as much as to any other area of computing. The relevant data must be effectively organized before proceeding (in the diagram this is depicted as items 1, 2 and 3).

What is machine learning? It is a way to use computers to examine data and find patterns that we don't realize exist. A variety of algorithms serve this purpose; some are very difficult to understand and some are quite simple. Each has strengths and weaknesses, and no single algorithm works well for every type of data or analysis.

Therefore, the first step in leveraging data analytics (on clean data, of course) is to understand, at a high level, how the computer perceives that data. This is known as data preprocessing, and it encompasses the tasks needed to find actionable information within the data (diagram item 4): Aggregation, Sampling, Dimensionality Reduction, Feature Subset Selection, Feature Creation, Discretization, and Feature Transformation. I will explore each of these tasks in future postings.
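As a preview, several of these preprocessing tasks can be sketched in a few lines of plain Python. This is an illustrative sketch, not a production pipeline: the transaction data, customer IDs, bin edges and variance cut-off are all hypothetical. Dimensionality reduction (for example, principal component analysis) is left out because it needs linear-algebra support beyond the standard library.

```python
import math
import random
from collections import defaultdict

# Hypothetical raw transactions: (customer_id, order_amount)
transactions = [
    ("c1", 120.0), ("c1", 80.0), ("c2", 15.0),
    ("c3", 300.0), ("c3", 250.0), ("c3", 50.0),
]

def aggregate(rows):
    """Aggregation: roll individual transactions up to one record per customer."""
    totals = defaultdict(lambda: {"total": 0.0, "orders": 0})
    for cust, amount in rows:
        totals[cust]["total"] += amount
        totals[cust]["orders"] += 1
    return dict(totals)

def create_features(customers):
    """Feature creation: derive average order value from existing fields."""
    for rec in customers.values():
        rec["avg_order"] = rec["total"] / rec["orders"]
    return customers

def discretize(value, edges):
    """Discretization: map a continuous value to a bin index (0..len(edges))."""
    return sum(value >= e for e in edges)

def log_transform(value):
    """Feature transformation: compress a skewed monetary scale with log(1 + x)."""
    return math.log1p(value)

def sample(keys, k, seed=42):
    """Sampling: draw a reproducible random subset for faster experiments."""
    return random.Random(seed).sample(sorted(keys), k)

def select_features(records, names, min_variance=1e-9):
    """Feature subset selection: keep only features that actually vary."""
    keep = []
    for name in names:
        vals = [r[name] for r in records]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        if var > min_variance:
            keep.append(name)
    return keep

customers = create_features(aggregate(transactions))
print({c: discretize(r["avg_order"], edges=[50, 150]) for c, r in customers.items()})
# {'c1': 1, 'c2': 0, 'c3': 2}
```

Each function is intentionally tiny; real tools perform the same steps at scale, but the intent of each task is the same.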

Once the data is understood and we know how to structure it for automated analytics (diagram items 5 and 6), we need to run it through machine learning algorithms (diagram item 7). These are the secret sauce of many data analytics tools. The algorithms use a variety of mathematical approaches to identify relationships within the data. What isn't always easy to understand is why the analytics identify certain relationships, and some algorithms make this harder to discern than others. As with the data preprocessing steps, I'll delve into these algorithms in future posts.
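At the transparent end of the spectrum sits OneR ("one rule"), a well-known and very simple classifier. For each candidate feature, it predicts the most common outcome for each feature value and then keeps the single feature whose rule makes the fewest mistakes on the training data. Its output is directly readable, which is the property that many more accurate algorithms lack. The sketch below is an illustration only; the feature names and rows are hypothetical.

```python
from collections import Counter, defaultdict

def one_r(rows, features, target):
    """OneR: for each feature, predict the majority outcome per feature value,
    then keep the one feature whose rule makes the fewest training errors.
    Returns (feature, {value: predicted_outcome}, error_count)."""
    best = None
    for feat in features:
        counts = defaultdict(Counter)          # value -> Counter of outcomes
        for row in rows:
            counts[row[feat]][row[target]] += 1
        rule = {val: c.most_common(1)[0][0] for val, c in counts.items()}
        errors = sum(sum(c.values()) - c[rule[val]] for val, c in counts.items())
        if best is None or errors < best[2]:
            best = (feat, rule, errors)
    return best

# Hypothetical, already-discretized customer history
rows = [
    {"tenure": "new",         "complaints": "some", "defected": True},
    {"tenure": "new",         "complaints": "none", "defected": False},
    {"tenure": "established", "complaints": "some", "defected": True},
    {"tenure": "established", "complaints": "none", "defected": False},
    {"tenure": "new",         "complaints": "some", "defected": True},
]
feature, rule, errors = one_r(rows, ["tenure", "complaints"], "defected")
print(feature, rule, errors)  # complaints {'some': True, 'none': False} 0
```

A result like this can be read back in business terms immediately ("customers with complaints defect"), whereas a neural network trained on the same data would need extra work to explain.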

Fundamentally, the output from this type of tool is a predictive model (diagram item 8) that can take new data and predict the outcomes. If we have focused on something pertinent to our operations, such as retaining customers, we want the computer to find a way to predict which customers are likely to defect. If such a model is tested and found to provide accurate results, we can be confident that within its black box it "knows" something about our defecting clients that we might be able to use to improve retention.
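"Tested and found to provide accurate results" usually means scoring the model on holdout data it never saw during training. Below is a minimal sketch of that check. The `predict` function and the holdout rows are hypothetical. Precision and recall are reported alongside accuracy because defectors are often a small minority, and a model that never predicts defection can still look accurate.

```python
def evaluate(predict, holdout):
    """Compare predictions with known outcomes on held-out data.
    `holdout` is a list of (features, actually_defected) pairs."""
    tp = fp = fn = tn = 0
    for features, actual in holdout:
        predicted = predict(features)
        if predicted and actual:
            tp += 1          # correctly flagged defector
        elif predicted:
            fp += 1          # flagged, but stayed
        elif actual:
            fn += 1          # missed defector
        else:
            tn += 1          # correctly left alone
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical model: flag customers with two or more recent complaints
def model(features):
    return features["complaints"] >= 2

holdout = [
    ({"complaints": 3}, True),
    ({"complaints": 0}, False),
    ({"complaints": 2}, False),
    ({"complaints": 1}, True),
    ({"complaints": 4}, True),
]
metrics = evaluate(model, holdout)
print(metrics)
```

Here the model catches two of the three actual defectors (recall of 2/3) and is right two out of three times when it raises a flag (precision of 2/3).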

It is the next step, using the model to derive a business rule change, that requires human intervention. The task is to root out the underlying cause(s) the computer has found, in a way that we are able to understand (diagram item 9) and, more importantly, that we can act upon (diagram item 10). This is the crux of leveraging data analytics to improve business operations.
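One way to begin that translation, assuming the model is linear (such as a logistic regression), is to break a prediction into per-feature contributions (weight × value) and see which inputs drove it. The weights, feature names and threshold below are hypothetical, and this technique does not apply directly to non-additive models. The drafted rule is only a candidate for people to review, not something to deploy automatically.

```python
def top_drivers(weights, customer, n=2):
    """For a linear model, each feature contributes weight * value to the score;
    ranking contributions by magnitude shows which inputs drove a prediction."""
    contributions = {name: w * customer.get(name, 0.0) for name, w in weights.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:n]

def draft_rule(feature, threshold, action="route to retention team"):
    """Turn a dominant driver into a plain-language candidate rule for review."""
    return f"IF {feature} >= {threshold} THEN {action}"

# Hypothetical learned weights (positive = pushes toward defection)
weights = {"support_calls_90d": 0.8, "months_tenure": -0.05, "discount_used": -0.3}
customer = {"support_calls_90d": 4, "months_tenure": 6, "discount_used": 0}

drivers = top_drivers(weights, customer)
print([name for name, _ in drivers])  # ['support_calls_90d', 'months_tenure']
print(draft_rule(drivers[0][0], 3))
# IF support_calls_90d >= 3 THEN route to retention team
```

The threshold of 3 is a human judgement made after looking at the drivers. The code only surfaces the evidence.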

To successfully leverage the data analytics findings (items 9 and 10) a business needs three things. First, it must have a formal understanding of the processes used within the analytic environment. Experts must be able to translate the predictive model back to the actual source data that is pertinent to the predictions.

Second, there must be an open and collaborative environment that accepts unanticipated data relationships and works to define them in business terms, rather than looking to dispute or discredit them. Often, people who "know the business" cannot accept what the data analytics results are showing. The findings, often by definition, are at odds with commonly accepted "truths." This is part of the power of these tools: they aren't beholden to our mental baggage and, by design, they think outside our business-knowledge-based box.

Third, the business must have an agile environment that can apply the changes culled from the model to the underlying processes and rules. This allows the patterns and causes found by the data analytics tool to manifest as timely action within the business's operations. Therefore, there must be business leaders empowered to change business processes and rules, as well as an IT infrastructure that supports implementing rapid rule and process changes (diagram item 11).
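A common way to support rapid rule changes is to treat rules as data rather than code, so that a new threshold can be loaded without a software release. The sketch below is a hypothetical, stripped-down illustration of that idea. Commercial rule engines offer far richer authoring, versioning and governance. The JSON layout, field names and action names are all assumptions.

```python
import json
import operator

# Comparison operators a rule may use
OPS = {">=": operator.ge, ">": operator.gt, "<=": operator.le,
       "<": operator.lt, "==": operator.eq}

class RuleSet:
    """Rules held as data, so they can be swapped without redeploying code."""

    def __init__(self, rules_json):
        self.rules = []
        self.load(rules_json)

    def load(self, rules_json):
        """Validate the whole new rule set before replacing the active one."""
        rules = json.loads(rules_json)
        for r in rules:
            if r["op"] not in OPS:
                raise ValueError(f"unsupported operator: {r['op']}")
        self.rules = rules

    def actions_for(self, record):
        """Return the actions of every rule whose condition the record meets."""
        return [r["action"] for r in self.rules
                if r["field"] in record
                and OPS[r["op"]](record[r["field"]], r["value"])]

v1 = '[{"field": "support_calls_90d", "op": ">=", "value": 5, "action": "retention_offer"}]'
v2 = '[{"field": "support_calls_90d", "op": ">=", "value": 3, "action": "retention_offer"}]'

rules = RuleSet(v1)
print(rules.actions_for({"support_calls_90d": 4}))  # []
rules.load(v2)  # analytics suggested a lower threshold
print(rules.actions_for({"support_calls_90d": 4}))  # ['retention_offer']
```

Validating before swapping means a malformed update leaves the previous rules in force, which matters once business users are changing rules directly.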

The faster an organization can take data analytics results and update business operations (rules and processes), the better its ability to respond to changing business environments. Such a capability provides a key differentiator for any business. More and more companies are finding themselves competing in ever more commoditized markets. It is an organization’s unique ability to use automation as a way to quickly personalize and flex to specific situations that allows it to be more productive, more responsive and ultimately more successful.

This process is neither simple nor completely automatic. It consistently requires manual effort and tuning. Machines don't do the thinking; they simply provide leaders with actionable information from which conclusions may be drawn and decisions made.

Some business use cases for data analytics tools are easier to implement than others. For instance, predictive models around actuarial data lead to scoring rules in a business rule environment fairly directly. The relationships between buying patterns and social media and their impact on business processes and rules are not as straightforward to map. The rewards, however, are meaningful and worthwhile as long as the correct data is being used to drive the correct decisions.
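To illustrate why the actuarial case is relatively direct, here is a sketch of one widely used technique from scorecard building. It scales logistic-regression coefficients (log-odds per unit) into integer points, so that every `pdo` ("points to double the odds") points doubles the modeled odds of the outcome. The coefficients, feature names and referral threshold are hypothetical. The intercept is omitted because only relative scores are compared against the threshold.

```python
import math

def to_points(coefficients, pdo=20):
    """Scale log-odds coefficients into integer points per unit of each feature;
    adding `pdo` points corresponds to doubling the modeled odds."""
    factor = pdo / math.log(2)
    return {name: round(coef * factor) for name, coef in coefficients.items()}

def score(points, applicant):
    """Sum the points for an applicant (features default to 0 when absent)."""
    return sum(pts * applicant.get(name, 0) for name, pts in points.items())

def underwriting_action(total, refer_at=50):
    """The resulting business rule: refer high scores to an underwriter."""
    return "refer" if total >= refer_at else "auto-approve"

# Hypothetical coefficients from a model of claim likelihood (binary features)
coefficients = {"young_driver": 0.693, "prior_claim": 1.386, "urban": 0.2}
points = to_points(coefficients)
print(points)  # {'young_driver': 20, 'prior_claim': 40, 'urban': 6}

applicant = {"young_driver": 1, "prior_claim": 1}
total = score(points, applicant)
print(total, underwriting_action(total))  # 60 refer
```

A points table and a threshold map naturally onto the lookup-and-compare rules most rule engines handle well. Social-media-driven patterns rarely reduce to something this tidy.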

If you are exploring data analytics as an input to business rule and process improvements, I'd be interested in hearing about your experiences and thoughts. Also, as I mentioned at the beginning, this post is meant to introduce the high-level concepts. Future postings will dive deeper into the individual processes, tools and techniques that were touched upon.

Tags: business intelligence, business rules, cognitive corporation, data, data analytics, Information Systems, linkedin, machine learning