Archive for the ‘Data Analytics’ Category

Thursday, June 10th, 2021

For background on this post, please see my last entry, Part 1: Questions and Baselining.

What separates today’s machine learning from human learning? One word: concepts.

“How so?” you might ask. To see what I mean, let’s start by looking at standard machine learning inputs and outputs. I’ll focus on supervised learning.

Supervised Machine Learning

Supervised machine learning is an approach where we start with a set of records. In each record, one field contains the correct answer, known as the target attribute. The other fields in the record contain related information, formally known as descriptive attributes. For example, we might have a set of measurements for flower petals and the flower’s name for each set of measures. We want the computer to learn how to identify different types of flowers. For those of you with machine learning experience, you’ll recognize the Iris data set as the inspiration for my example.

Supervised machine learning is similar to how we might teach children some set of math facts. We give them many examples of addition problems and answers. Over time we would like them to understand the mechanics of addition and solve novel problems. We have a similar goal with supervised learning. We want to give the computer lots of examples with the correct answers and have it figure out how to answer new problems.

Decision Trees

Table 1: Flower Data

| Petal Length | Petal Width | Flower (Answer) |
| --- | --- | --- |
| 2 | 1.7 | Rose |
| 2.5 | 2.1 | Rose |
| 3.2 | 0.5 | Daisy |
| 3.6 | 0.6 | Daisy |

Figure 1: Example Decision Tree

We’ll begin looking at what the machine is learning using a basic supervised approach, decision trees. In this case, the computer looks at the correct answer, the target attribute. It uses the descriptive attributes in the record to create a decision that would use that record’s data to arrive at the correct result. In Table 1, there are four records. For each, there are two measurements for rose petals and daisy petals. The resulting decision tree might look like Figure 1.

This is a simple example, but the interesting point is that the computer is limited to making a decision using the data in the record. As discussed in my previous post, the text “Rose” doesn’t mean anything to the system. We could add additional data to the record, such as details about petal color and whether the stem has thorns. But the machine learning process won’t have that information available without explicitly adding it to the data. Since the computer doesn’t know what the text “Rose” means, it can’t incorporate other knowledge about roses into its decision tree.
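To make this concrete, here is a minimal sketch in plain Python of how a one-level decision tree could be learned from the four records in Table 1. It is not the algorithm behind Figure 1 or any particular library; the field names and the functions `learn_split` and `predict` are my own illustrative assumptions, and a real decision tree learner would use a measure such as information gain and split recursively.

```python
# Table 1 records: (petal length, petal width, flower name).
RECORDS = [
    (2.0, 1.7, "Rose"),
    (2.5, 2.1, "Rose"),
    (3.2, 0.5, "Daisy"),
    (3.6, 0.6, "Daisy"),
]
FEATURES = ["petal_length", "petal_width"]  # hypothetical field names


def learn_split(records):
    """Return (errors, feature index, threshold, left label, right label)
    for the single split that misclassifies the fewest records."""
    best = None
    for f in range(len(FEATURES)):
        values = sorted({r[f] for r in records})
        # Candidate thresholds are midpoints between adjacent observed values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [r[-1] for r in records if r[f] <= threshold]
            right = [r[-1] for r in records if r[f] > threshold]
            # Each branch predicts its most common label.
            left_label = max(set(left), key=left.count)
            right_label = max(set(right), key=right.count)
            errors = sum(label != left_label for label in left) + sum(
                label != right_label for label in right
            )
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    return best


def predict(split, petal_length, petal_width):
    """Walk the one-level tree for a new set of measurements."""
    _, f, threshold, left_label, right_label = split
    value = (petal_length, petal_width)[f]
    return left_label if value <= threshold else right_label


split = learn_split(RECORDS)
print(FEATURES[split[1]], predict(split, 2.2, 1.9), predict(split, 3.4, 0.4))

# Swapping the names for arbitrary codes leaves the learned split unchanged,
# because the labels are only ever compared for equality.
coded = [(length, width, {"Rose": "A", "Daisy": "B"}[name])
         for length, width, name in RECORDS]
coded_split = learn_split(coded)
```

On these four records the sketch settles on petal length, with a threshold between 2.5 and 3.2. The last few lines illustrate the limitation: replacing “Rose” and “Daisy” with “A” and “B” yields the same feature and threshold, since the learner never looks inside the label text.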

This is a considerable hurdle in machine learning. As people learn new information, they build a knowledge base and apply it to new learning. That isn’t how these discrete learning processes work. And that limitation is imposed chiefly because the computer isn’t using concepts.

(more…)

Tags: AI, artificial intelligence, business intelligence, data mining, machine learning, semantics

Posted in Artificial Intelligence (AI), Data Analytics, Data Mining, infuzIT, Intelligent Business Systems, Machine Learning, Semantic Technology | No Comments »

Monday, May 17th, 2021

In this series of posts, I’m delving into the limitations of machine learning and AI, hamstrung by current techniques, while considering technologies and practices to transform business intelligence efforts beyond the status quo.

Question of Intelligence

What is intelligence? What underlies intelligence? What aspects of intelligence do we want machine learning to demonstrate? What is artificial intelligence as opposed to intelligence? What capabilities does a computer need to achieve intelligence? Can programs be written to derive intelligence within a modern computer?

Questions delving into intelligent systems go on and on. I’m going to spend a few blog entries exploring machine learning and our quest to create and benefit from intelligent computer systems. Through this discussion, I’ll explore these questions.

Framing the Discussion

Note that my focus is business automation: what organizations are seeking to gain from machine learning and intelligent systems. I am purposefully avoiding a philosophical discussion of intelligence. To that end, a primary assumption is that we are interested in applying human-style intelligence to advance business or operational success. Put another way, animals and plants demonstrate intelligence of differing types; however, mimicking these is not an organization’s goal when employing machine learning.

Key Terms

To begin, I need working definitions for learning and intelligence. These will serve as touchstones for exploring computer-based learning and intelligence. Merriam-Webster’s dictionary provides helpful initial entries for each. The definition for “learn” is “to gain knowledge or understanding of or skill in by study, instruction, or experience.” “Intelligence” is defined in two parts: “the ability to learn or understand or to deal with new or trying situations” and “the ability to apply knowledge to manipulate one’s environment or to think abstractly as measured by objective criteria (such as tests).”

The terms knowledge and understanding appear in both definitions and are vital to successful machine learning applications. Knowledge and understanding are based on information.

(more…)

Tags: AI, artificial intelligence, business intelligence, data mining, machine learning, semantics

Posted in Artificial Intelligence (AI), Data Analytics, Data Mining, infuzIT, Intelligent Business Systems, Machine Learning, Semantic Technology | No Comments »

Monday, June 8th, 2015

CMS has published a final rule (http://federalregister.gov/a/2015-14005) focused on changes to the Medicare Shared Savings Program (MSSP) which impacts Accountable Care Organizations (ACO) significantly. There are a variety of interesting changes being made to the program. For this discussion I’m looking at CMS’ continual drive toward data use and integration as a basis for improving quality of care, gaining efficiency and cutting costs in health care. One way this drive is manifested in the new rule regards an ACO’s plans as related to “enabling technologies,” which is an umbrella term for leveraging electronic data.

As background, Subpart B (425.100 to 425.114) of the MSSP describes ACO eligibility requirements. Two of the changes in this section clearly underscore the importance of electronic data and data integration to the fundamental operation of an ACO. Specifically, looking at page 127, the following updates are being made to section 425.112(b)(4) (emphasis mine):

Therefore, we proposed to add a new requirement to the eligibility requirements under § 425.112(b)(4)(ii)(C) which would require an ACO to describe in its application how it will encourage and promote the use of enabling technologies for improving care coordination for beneficiaries. Such enabling technologies and services may include electronic health records and other health IT tools (such as population health management and data aggregation and analytic tools), telehealth services (including remote patient monitoring), health information exchange services, or other electronic tools to engage patients in their care.

It goes on to add:

Finally, we proposed to add a provision under § 425.112(b)(4)(ii)(E) to require that an ACO define and submit major milestones or performance targets it will use in each performance year to assess the progress of its ACO participants in implementing the elements required under § 425.112(b)(4). For instance, providers would be required to submit milestones and targets such as: projected dates for implementation of an electronic quality reporting infrastructure for participants;

It is clear from the first change that an ACO must have a documented plan in place for continually expanding its use of electronic data and providing data visibility and integration between itself and its beneficiaries and providers. This is a tall order. The number of different systems and data formats along with myriad reporting and analytic platforms makes a traditional integration approach tedious at best and a significant business risk at worst.

The second change, keeping CMS apprised of the progress of data-centric projects, is clearly intended to keep the attention on these data publishing and integration projects. It won’t be enough to have a well-articulated plan; the ACO must be able to demonstrate progress on a regular basis.

(more…)

Tags: Centers for Medicare and Medicaid Services (CMS), data, healthcare, Information Systems, lightweight data federation, medicare, semantics

Posted in Agile Data Integration, Data Analytics, Healthcare Plan, Information Systems, Medicare, NoSQL, Semantic Technology | No Comments »

Friday, May 15th, 2015

I’m looking forward to being one of the presenters for infuzIT’s hands-on data integration and analysis workshop at this year’s SmartData Conference in San Jose. Giving people the opportunity to see the amazing power of semantics combined with NoSQL to quickly integrate and analyze data makes my day.

My background includes significant work with data, both as an application developer and data warehouse architect. The acceleration of data-centric hardware and software capabilities over the past 10 years now supports a very different paradigm for exploring, reporting and analyzing data. Processes and procedures for creating a data warehouse or mart, the accepted rules of the road for creating integrated data repositories, are no longer clear cut. The data federation debate is no longer Inmon or Kimball.

A significant shift in data integration revolves around the required lifespan of the integrated data. This lifespan has two key aspects whose evolution now allows us to rethink our approach to data federation. This permits us to be much more agile when bringing heterogeneous data sources together. The two aspects are reflected in these design questions: 1) what data, if any, will be rehosted; and 2) what relationships will be supported within the integrated data?

Rehosting Data

In a traditional data warehouse the data must be rehosted. The new repository is the target where transformed data (cleaned-up, standardized) exists. The queries that will be retrieving data from multiple sources are really pulling data from a single source that has been populated from multiple sources. It represents a heavyweight process, driven by Extract-Transform-Load (ETL) scripts and requiring space to host redundant information.
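A small sketch of that rehosting pattern, using Python’s built-in `sqlite3` module as a stand-in warehouse. The two source systems, their field names and the `warehouse` table are hypothetical, invented purely for illustration; a production ETL process would involve far more cleansing and scheduling.

```python
import sqlite3

# Extract: two hypothetical source systems with inconsistent formats.
claims_system = [
    {"member": " A100 ", "amount": "125.50"},
    {"member": "b200", "amount": "80"},
]
billing_system = [{"member_id": "A100", "total_cents": 4000}]


def transform(member, amount):
    """Clean up and standardize one record before loading."""
    return member.strip().upper(), round(float(amount), 2)


def run_etl(conn):
    """Load transformed copies of both sources into one warehouse table."""
    conn.execute(
        "CREATE TABLE warehouse (member_id TEXT, amount REAL, source TEXT)"
    )
    rows = [(*transform(r["member"], r["amount"]), "claims")
            for r in claims_system]
    rows += [(*transform(r["member_id"], r["total_cents"] / 100), "billing")
             for r in billing_system]
    conn.executemany("INSERT INTO warehouse VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
run_etl(conn)

# The "multi-source" query actually reads only the rehosted copy.
totals = dict(
    conn.execute("SELECT member_id, SUM(amount) FROM warehouse GROUP BY member_id")
)
print(totals)
```

Note that the final query never touches the source systems. Every record it reads is a redundant, transformed copy, which is where the weight of the traditional approach comes from.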

Relationships Between Data Elements

The target warehouse schema determines what relationships are defined between the data elements being combined. Getting this “right” requires careful planning and coordination between the various groups that will use the warehouse. Given the significant effort, represented as cost, organizations tend to design data warehouses to support broad constituencies as a way to amortize the investment across departments and projects.

Paradigm Shift

Semantics and NoSQL allow us to reduce the effort of integrating data by orders of magnitude. They support a completely different mindset for bringing data together. Instead of carefully designing a model that works well in the general sense (reducing the value in specific cases) we have environments that allow us to experiment, adjust and focus on each case.

Below are several drivers which allow us to approach data federation differently using semantics and NoSQL.

Tags: data, data integration, lightweight data federation, NoSQL, semantics, workshop

Posted in Agile Data Integration, Architecture, Data, Data Analytics, infuzIT, NoSQL, Semantic Technology | No Comments »

Thursday, May 22nd, 2014

This week I had the opportunity to attend the Medicaid Managed Care Congress (MMCC) in Baltimore, MD and the privilege of speaking with a variety of leaders from provider, payer, and services organizations. With me from Blue Slate Solutions were Scott Van Buren and Chris Garber. A common theme we heard as we spoke with the attendees was the challenge of bringing data together from multiple sources and making sense of that information.

Medicaid is potentially the most complex government program that exists in the United States. There are federal and state aspects as well as portions that are handled at a local level. Some funding and services are defined as required while others are optional. The financial models’ formulas involve many variables. In short, there are numerous challenges in Medicaid, including the dual eligible changes that seek to address the services disconnects that often exist when a person is eligible for both Medicare and Medicaid.

Combining data from providers, payers, patients, government entities and the community is necessary in order to optimize the quality of care that is provided to each patient. The definition of provider continues to expand, covering not just the medical needs of a person but incorporating the various social services, so important to the holistic care of an individual, under the umbrella of “provider.”

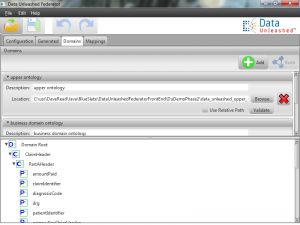

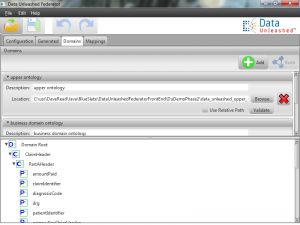

As we listened to people and talked about their data challenges we were also able to walk them through the Data Unleashed™ approach. The iterative learn-as-you-go process resonated across the board, whether people represented patient advocacy groups, provider organizations or healthcare plans. The capability to start small, obtain value quickly and adapt rapidly to changing environments fits the Medicaid complexities well.

If you would like to learn more about our agile and lightweight approach to accessing data from across your enterprise in order to quickly begin creating meaningful reporting and analytics, please check out dataunleashed.com for descriptions, videos and case studies. We’d also appreciate the opportunity to host a webinar with your team where we can explore Data Unleashed™ in more depth and discuss your specific data challenges.

Tags: analytics, data, enterprise systems, Information Systems, lightweight data federation, ontology, Public Data, reporting, semantics, system integration

Posted in Data, Data Analytics, Data Unleashed, Medicaid, Semantic Technology, Tools and Applications | No Comments »

Thursday, May 15th, 2014

For those of you spending time in Baltimore next week (May 19-21, 2014) to attend the Medicaid Managed Care Congress please stop by Blue Slate’s booth. Our MINI road trip begins Sunday as we head for Camden Yards and the beautiful inner harbor area. Our goal in attending? Having the opportunity to speak with you about your data challenges as well as your Medicaid journey.

We will be demonstrating what we mean by lightweight data federation and agile analytics as the drivers behind creating the Data Unleashed™ service platform. Given our extensive healthcare focus, we have deep experience working with companies on Medicaid initiatives, such as those involving dual eligibles, for instance the FIDA program in New York State.

Beyond data integration and analytics, we provide expertise for plans to: implement business process and business rule management solutions; prepare for site reviews and audits; and unify data from a variety of internal and cloud-based systems. More broadly beyond Medicaid, we work extensively in the Medicare and commercial healthcare space, leading transformative change for businesses such as Medicare Administrative Contractors (MACs) and Blues plans.

We look forward to having a chance to learn more about your operational challenges and share with you our organization’s background and focus areas. Let’s get together and explore opportunities to advance your organization’s strategic goals around improving quality of care and reducing costs.

Tags: conference, data, healthcare, ontology, semantics

Posted in Cognitive Corporation, Data, Data Analytics, Data Unleashed, Healthcare Plan, Medicaid, Medicare, Semantic Technology | No Comments »

Friday, April 25th, 2014

I’m glad you asked. Scott Van Buren and I will be presenting a Dataversity webinar entitled, Using Semantic Technology to Drive Agile Analytics, on exactly that topic. Scheduled for May 14, 2014 (and available for replay afterwards), this webinar will highlight key semantic technology capabilities and how those provide an environment for data agility.

We will focus most of the webinar on a case study that demonstrates the agility of semantic technology being used to conduct data analysis within a healthcare payer organization. Healthcare expertise is not required in order to understand the case study.

As we look into several iterations of data federation and analysis, we will see the effectiveness of bringing the right subset of data together at the right time for a particular data-centric use. This concept translates well to businesses that have multiple sets of data or applications, including data from third parties, and seek to combine relevant subsets of that information for reporting or analytics. Further, we will see how this augments data warehousing projects, where the lightweight and agile data federation approach informs the warehouse design.

Please plan to join us virtually on May 14 as we describe semantic technology, lightweight data federation and agile data analytics. There will also be time for you to pose questions and delve into areas of interest that we do not cover in our presentation.

The webinar registration page is: http://content.dataversity.net/051414BlueslateWebinar_DVRegistrationPage.html

We look forward to having the opportunity to share our data agility thoughts and experiences with you.

Tags: agile analytics, data, lightweight data federation, ontology, semantics, teaching, webinar

Posted in Architecture, Data, Data Analytics, Data Unleashed, Information Systems, Semantic Technology, Tools and Applications | No Comments »

Friday, April 18th, 2014

I am thrilled to have been invited back to participate at the Semantic Technology and Business (SemTechBiz) conference. This is the premier US conference for learning about, exploring and getting your hands on semantic technology. I’ll be part of a Blue Slate team (including Scott Van Buren and Michael Delaney) who will be conducting a half-day hands-on workshop, Integrating Data Using Semantic Technology, on August 19, 2014. Our mission is to have participants use semantic technology to integrate, federate and perform analysis across several data sources.

We have some work to do to iron out our overall use case, pulling from work we have done with several clients. At a minimum we’ll be working with database schemas, ontologies, reasoners and data analytics tools. It will be a fun and educational experience for attendees.

I’ll post more specifics once the SemTechBiz agenda is published and we have finalized the workshop structure. I hope to see you this August 19-21 in San Jose for our workshop and the amazing learning opportunities throughout the conference.

For more information on the conference, visit its website: http://semtechbizsj2014.semanticweb.com/index.cfm

Tags: conference, data, ontology, semantics, teaching

Posted in Data, Data Analytics, Semantic Technology, Tools and Applications | No Comments »

Friday, August 10th, 2012

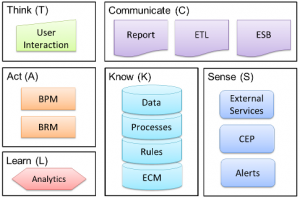

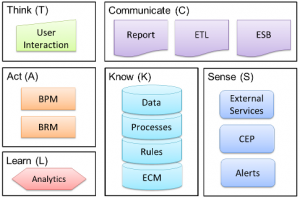

I am excited to share the news that Blue Slate Solutions has kicked off a formal innovation program, creating a lab environment which will leverage the Cognitive Corporation™ framework and apply it to a suite of processes, tools and techniques. The lab will use a broad set of enterprise technologies, applying the learning organization concepts implicit in the Cognitive Corporation’s™ feedback loop.

I’ve blogged a couple of times (see references at the end of this blog entry) about the Cognitive Corporation™. The depiction has changed slightly but the fundamentals of the framework are unchanged.

The focus is to create a learning enterprise, where the learning is built into the system integrations and interactions. Enterprises have been investing in these individual components for several years; however, they have not truly been integrating them in a way that promotes learning.

By “integrating” I mean allowing the systems to understand the meaning of the data being passed between them. Creating a screen in a workflow (BPM) system that presents data from a database to a user is not “integration” in my opinion. It is simply passing data around, which prevents the enterprise ecosystem (all the components) from working together and collectively learning.

I liken such connections to my taking a hand-written note in a foreign language, which I don’t understand, and typing the text into an email for someone who does understand the original language. Sure, the recipient can read it, but I, representing the workflow tool passing the information from database (note) to screen (email) in this case, have no idea what the data means and cannot possibly participate in learning from it. Integration requires understanding. Understanding requires defined and agreed-upon semantics.

This is just one of the Cognitive Corporation™ concepts that we will be exploring in the lab environment. We will also be looking at the value of these technologies within different horizontal and vertical domains. Given our expertise in healthcare, finance and insurance, our team is well positioned to use the lab to explore the use of learning BPM in many contexts.

(more…)

Tags: BPM, business rules, cognitive corporation, data, enterprise applications, enterprise systems, Information Systems, linkedin, ontology, programming, semantics

Posted in Architecture, BPM, Business Processes, Business Rules, Cognitive Corporation, Data, Data Analytics, Information Systems, Semantic Technology, Software Composition, Software Development, Tools and Applications | No Comments »

Tuesday, October 25th, 2011

The Cognitive Corporation™ is a framework introduced in an earlier posting. The framework is meant to outline a set of general capabilities that work together in order to support a growing and thinking organization. For this post I will drill into one of the least mature of those capabilities in terms of enterprise solution adoption – Learn.

Business rules, decision engines, BPM, complex event processing (CEP): these all evoke images of computers making speedy decisions to the benefit of our businesses. The infrastructure, technologies and software that provide these solutions (SOA, XML schemas, rule engines, workflow engines, etc.) support the decision automation process. However, they don’t know what decisions to make.

The BPM-related components we acquire provide the how of decision making (send an email, route a claim, suggest an offer). Learning, supported by data analytics, provides a powerful path to the what and why of automated decisions (send this email to that person because they are at risk of defecting, route this claim to that underwriter because it looks suspicious, suggest this product to that customer because they appear to be buying these types of items).

I’ll start by outlining the high level journey from data to rules and the cyclic nature of that journey. Data leads to rules, rules beget responses, responses manifest as more data, new data leads to new rules, and so on. Therefore, the journey does not end with the definition of a set of processes and rules. This link between updated data and the determination of new processes and rules is the essence of any learning process, providing a key function for the cognitive corporation.
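As a rough illustration of that cycle, the sketch below derives a claim-routing rule from historical data, then derives it again once the responses have produced new data. The claim amounts, the midpoint heuristic and the function names are all assumptions made up for this example; a real analytics process would use a far richer model.

```python
from statistics import mean


def learn_rule(history):
    """Data leads to rules: set the review threshold at the midpoint between
    the mean suspicious claim amount and the mean normal claim amount."""
    suspicious = [amount for amount, flagged in history if flagged]
    normal = [amount for amount, flagged in history if not flagged]
    return (mean(suspicious) + mean(normal)) / 2


def apply_rule(threshold, amount):
    """Rules beget responses: route a claim based on the current threshold."""
    return "review" if amount > threshold else "auto-pay"


# Hypothetical history of (claim amount, turned out to be suspicious).
history = [(100, False), (200, False), (900, True), (1100, True)]
rule_v1 = learn_rule(history)
first_response = apply_rule(rule_v1, 650)

# Responses manifest as more data: reviewers found these claims legitimate.
history += [(600, False), (700, False)]

# New data leads to new rules.
rule_v2 = learn_rule(history)
second_response = apply_rule(rule_v2, 650)

print(rule_v1, first_response, rule_v2, second_response)
```

The same 650 claim is routed differently before and after the update, which is the point: the rule set is never finished, because each round of responses changes the data the next rule is learned from.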

(more…)

Tags: business intelligence, business rules, cognitive corporation, data, data analytics, Information Systems, linkedin, machine learning

Posted in Architecture, BPM, Business Processes, Business Rules, Cognitive Corporation, Data, Data Analytics, Information Systems, Tools and Applications | No Comments »