MongoDB and Java – Powerful Complementary Platforms

Tuesday, May 31st, 2016

I have found that including MongoDB in the design of Java applications gives me a valuable level of flexibility in meeting client objectives. I have created an initial open source project on GitHub, JavaMongo, to provide working examples of Java and MongoDB integration. A secondary goal is to demonstrate development best practices, such as using testing frameworks and following a consistent coding style.

This post gives a little background on why I find Java and MongoDB to be useful tools in my software development arsenal, and then introduces the JavaMongo project. Future posts will include videos walking developers through the examples as well as the frameworks being used (such as JUnit, Cobertura and Checkstyle).

Background

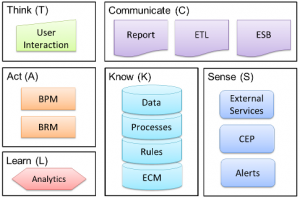

Java is a ubiquitous platform for creating business applications. It has proven itself across a wide range of use cases, from small point solutions to large, general-purpose solution stacks. The variety of libraries, frameworks and tools for designing, building, testing and managing Java applications provides significant benefits to companies that build their solutions in Java. However, an application without ready access to data isn’t particularly useful. As enterprise-scale database options have broadened to include NoSQL, developers building Java-based solutions should take advantage of these new data options wherever their strengths fit the problem.

MongoDB is a great NoSQL platform that can add capabilities to your applications. It is a document store that has proven its reliability, scalability and ease of integration across numerous small and large-scale applications. Its strengths complement the way we use relational databases for online transaction processing (OLTP) and offer advantages over the way we use relational databases for data marts and warehouses.

A point of clarification before proceeding: I’m not here to say that MongoDB is better than some other data product or, more generally, that document stores are better than relational databases. I find such arguments meaningless without a specific use case or project goal. These technologies are different, and each has strengths and weaknesses relative to a specific set of project objectives.

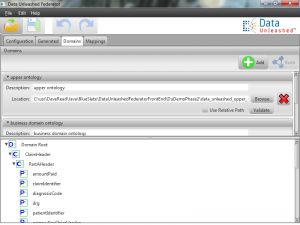

I have found that MongoDB plugs in well when I need a place to federate data (structured, semi-structured and unstructured). Because all of the data lives on a common platform, building and changing connections between attributes takes less work. If you’ve looked at other information about my background, you’ll see that I find semantic technology incredibly valuable for data federation and classification. As a flexible repository, MongoDB works well with semantic technology. At the end of this post I’ll give you a small example of that.

JavaMongo Project

The JavaMongo project is intended to provide Java developers with working examples of Java and MongoDB integrations. Over time I expect a variety of common situations to be demonstrated, with associated documentation explaining the use case and the resulting implementation.

To have some interesting data to work with, I’m using data sets that my company releases to the public domain. To run the JavaMongo examples, you’ll need to import that data into your MongoDB instance. For more information about downloading and importing the sample data, see the discussion of the MongoDB Collection of Honeypot Data on my NoSQL topic page.

The initial JavaMongo project contains a basic README file with information on running the example code. Instead of rehashing that information in this post, I’d like to walk through the basic operations being demonstrated in the example code. The main class we’ll explore is BasicStatistics (us.daveread.education.mongo.honeypot.BasicStatistics).

As you know, a Java program starts execution with the main() method. The first thing the BasicStatistics main() method does is create an instance of the BasicStatistics class.

BasicStatistics Constructor

The constructor code goes through the entire process of connecting to a MongoDB database, accessing a collection and running a query on data in the collection.
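The sketch below illustrates only the shape of this flow: main() does nothing but create the object, and the constructor performs the connect, collection and query steps. It is not the JavaMongo code. To stay runnable without the MongoDB Java driver, a plain in-memory Map stands in for the database, and the class name, collection name and field names are all hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical illustration of a constructor-driven example; not the JavaMongo source.
class ConstructorFlowSketch {

    // Result of the query, computed once in the constructor.
    private final long matchingDocuments;

    ConstructorFlowSketch(Map<String, List<Map<String, Object>>> database,
                          String collectionName,
                          Predicate<Map<String, Object>> filter) {
        // Step 1 ("connect"): with the real driver, a client would be created here.
        // In this sketch the in-memory database is passed in ready to use.

        // Step 2: access the collection; an unknown name behaves like an empty collection.
        List<Map<String, Object>> collection = database.getOrDefault(collectionName, List.of());

        // Step 3: run the query by counting the documents that match the filter.
        this.matchingDocuments = collection.stream().filter(filter).count();
    }

    long getMatchingDocuments() {
        return matchingDocuments;
    }

    public static void main(String[] args) {
        // Hypothetical sample documents; "port" is a placeholder field, not the honeypot schema.
        Map<String, List<Map<String, Object>>> database = Map.of(
                "events", List.of(
                        Map.<String, Object>of("port", 22, "protocol", "tcp"),
                        Map.<String, Object>of("port", 80, "protocol", "tcp"),
                        Map.<String, Object>of("port", 22, "protocol", "tcp")));

        // main() only creates the instance; the constructor does all of the work.
        ConstructorFlowSketch stats = new ConstructorFlowSketch(
                database, "events", doc -> Integer.valueOf(22).equals(doc.get("port")));
        System.out.println("Documents with port 22: " + stats.getMatchingDocuments());
        // prints "Documents with port 22: 2"
    }
}
```

Putting every step in the constructor keeps a small demo easy to read from top to bottom; in a larger application you might split the steps into separate methods so each can be tested on its own.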

First, an instance of MongoClientOptions is created. This class lets us configure client-side options related to the connection; I’ll go into more detail on it in future examples. In this case, the program simply sets the connection timeout to 2000 milliseconds (2 seconds) so that, if the instance is not available, the program won’t hang for a long time. You wouldn’t make the timeout this short in a production environment, but failing fast helps when debugging a local environment.
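Once the connection timeout is set through MongoClientOptions, the driver applies it on its own. The sketch below is a separate, hypothetical pre-flight check and is not part of JavaMongo or the MongoDB driver. It applies the same fail-fast idea with a plain TCP connection from the Java standard library, using the same 2000 ms budget. It assumes MongoDB's default port, 27017, on the local machine.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical pre-flight check; the driver's own timeout is configured via MongoClientOptions.
class FailFastCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            // connect(SocketAddress, int) throws SocketTimeoutException when the timeout
            // expires, or another IOException (for example, connection refused).
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed local instance on MongoDB's default port; adjust for your setup.
        int timeoutMillis = 2000;
        long start = System.nanoTime();
        boolean up = isReachable("localhost", 27017, timeoutMillis);
        long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
        System.out.println("MongoDB reachable: " + up + " (checked in " + elapsedMillis + " ms)");
    }
}
```

A check like this gives a clear message before any database work starts; the short timeout plays the same role here as it does in the example program, trading patience for quick feedback during local debugging.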