Medicaid Managed Care Congress Conversations Highlight the Value of Data Federation

Thursday, May 22nd, 2014

This week I had the opportunity to attend the Medicaid Managed Care Congress (MMCC) in Baltimore, MD and the privilege of speaking with a variety of leaders from provider, payer, and services organizations. With me from Blue Slate Solutions were Scott Van Buren and Chris Garber. A common theme we heard as we spoke with the attendees was the challenge of bringing data together from multiple sources and making sense of that information.

Medicaid is potentially the most complex government program that exists in the United States. There are federal and state aspects as well as portions that are handled at a local level. Some funding and services are defined as required while others are optional. The formulas in its financial models involve many variables. In short, there are numerous challenges in Medicaid, including the dual-eligible changes that seek to address the service disconnects that often exist when a person is eligible for both Medicare and Medicaid.

Combining data from providers, payers, patients, government entities and the community is necessary in order to optimize the quality of care that is provided to each patient. The definition of “provider” continues to expand: the term now covers not just the medical needs of a person but also the various social services that are so important to the holistic care of an individual.

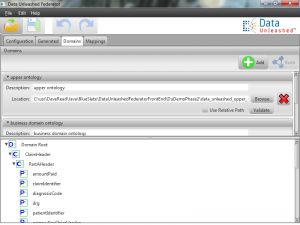

As we listened to people and talked about their data challenges, we were also able to walk them through the Data Unleashed™ approach. The iterative learn-as-you-go process resonated across the board, whether people represented patient advocacy groups, provider organizations or healthcare plans. The capability to start small, obtain value quickly and adapt rapidly to changing environments fits Medicaid’s complexities well.

If you would like to learn more about our agile and lightweight approach to accessing data from across your enterprise in order to quickly begin creating meaningful reporting and analytics, please check out dataunleashed.com for descriptions, videos and case studies. We’d also appreciate the opportunity to host a webinar with your team where we can explore Data Unleashed™ in more depth and discuss your specific data challenges.