Posts Tagged ‘enterprise applications’

Thursday, April 3rd, 2014

Data Unleashed™. The name expresses a vision of data freed from its shackles so that it can be quickly and iteratively accessed, related, studied and expanded. To achieve that vision, the process of combining, or federating, the data must be lightweight. That is, the approach must facilitate rapid data set expansion and on-the-fly relationship changes so that we can derive insights quickly. Likewise, the process must not require a significant investment in data structure design, since agility requires that we avoid a rigid structure.

Over the past year Blue Slate Solutions has been advancing its processes and technology to support this vision, which includes the integration between components in our Cognitive Corporation® framework. More recently we have invested in an innovation development project that combines our data integration experience and semantic technology expertise to create a service offering backed by a lightweight data federation platform. Our platform, Data Unleashed™, enables us to partner with customers who are seeking an agile, lightweight enhancement to traditional data warehousing.

I want to emphasize that we see the Data Unleashed™ approach to data federation as working in tandem with traditional Data Warehouses (DW) and other well-defined data federation options. It offers agility in data federation, serving focused data needs for which a warehouse is overkill, while supporting a process for iteratively deriving value through a lightweight data warehouse™ approach that informs a broader warehousing solution.

At a couple of points below I emphasize differences between Data Unleashed™ and a traditional DW. This is not meant to disparage the value of a DW but to explain why we feel that Data Unleashed™ adds a set of data federation capabilities to those of the DW.

As an aside, Blue Slate is producing a set of videos specifically about semantic technology, which is a core component of Data Unleashed™. The video series, “Semantic Technology, An Enterprise Introduction,” will be organized in two tracks, business-centric and technology-centric. Our purpose in creating these is to promote a holistic understanding of the value that semantics brings to an organization. The initial video provides an overview of the series.

What is Data Unleashed™ All About?

Data Unleashed™ is based on four key premises:

- the variety of data and data sources that are valuable to a business continue to grow;

- only a subset of the available data is valuable for a specific reporting or analytic need;

- integration and federation of data must be based on meaning in order to support new insights and understanding; and

- lightweight data federation, which supports rapid feedback regarding data value, quality and relationships, speeds the process of developing a valuable data set.
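To make the third and fourth premises a little more concrete, here is a minimal sketch in Python of federating two sources by meaning rather than by column name. It is not the Data Unleashed™ implementation; the source records, field names and shared concept identifiers (`ex:customerId` and so on) are hypothetical, and plain dictionaries stand in for the mappings an ontology would normally carry.

```python
# Hypothetical records from two systems that describe the same customers
# with different field names.
crm_rows = [
    {"cust_no": "C1", "full_name": "Ada Smith", "region": "East"},
    {"cust_no": "C2", "full_name": "Bo Jones", "region": "West"},
]
billing_rows = [
    {"account_holder_id": "C1", "balance_due": 120.0, "batch": "B7"},
    {"account_holder_id": "C2", "balance_due": 0.0, "batch": "B7"},
]

# Lightweight mappings from each source's fields to shared concepts.
# Fields we don't map (e.g. "batch") are simply left out of the federated view.
CRM_MAP = {"cust_no": "ex:customerId", "full_name": "ex:customerName",
           "region": "ex:salesRegion"}
BILLING_MAP = {"account_holder_id": "ex:customerId", "balance_due": "ex:amountOwed"}


def to_concepts(row, mapping):
    """Re-key one source row by shared concept, dropping unmapped fields."""
    return {mapping[field]: value for field, value in row.items() if field in mapping}


def federate(key_concept, *sources):
    """Merge (rows, mapping) pairs into one record per value of key_concept."""
    merged = {}
    for rows, mapping in sources:
        for row in rows:
            record = to_concepts(row, mapping)
            merged.setdefault(record[key_concept], {}).update(record)
    return merged


view = federate("ex:customerId", (crm_rows, CRM_MAP), (billing_rows, BILLING_MAP))
for customer_id, record in sorted(view.items()):
    print(customer_id, record["ex:customerName"], record["ex:amountOwed"])
# C1 Ada Smith 120.0
# C2 Bo Jones 0.0
```

In this sketch, adding a source or relating the data differently means adding or editing a mapping rather than redesigning a schema, and only the mapped subset of each source reaches the federated view.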

I’ll briefly describe our thinking around each of these points. Future posts will go into more depth about Data Unleashed™ as well. In addition, several Blue Slate leaders will be posting their thoughts about this offering and platform.

Tags: cognitive corporation, data, enterprise applications, enterprise systems, Information Systems, lightweight data federation, lightweight data warehouse, ontology, semantics

Posted in Architecture, Cognitive Corporation, Data, Data Unleashed, Information Systems, Semantic Technology

Monday, July 8th, 2013

At this year’s Semantic Technology and Business Conference in San Francisco, Mike Delaney and I presented a session on Semantic Technology adoption in the enterprise entitled When to Consider Semantic Technology for Your Enterprise. Our talk centered on three key messages: 1) describe semantic technology as it relates to enterprise data and applications; 2) discuss where semantic technology augments current data persistence and access technologies; and 3) highlight situations that should lead an enterprise to begin using semantic technology as part of its enterprise architecture.

In order to allow a broader audience to benefit from our session we are creating a set of videos based on our original presentation. These are being released as part of Blue Slate Solutions’ Experts Exchange Series. Each video will be 5 to 10 minutes in length and will focus on one of the sub-topics from the presentation.

Here is the overall agenda for the video series:

| # | Title | Description |
|---|-------|-------------|
| 1 | Introduction | Meet the presenters and the topic |
| 2 | What? | Define Semantic Technology in the context of these videos |
| 3 | What’s New? | Compare semantic technology to relational and NoSQL technologies |
| 4 | Where? | Discuss the ecosystem and maturity of vendors in the semantic technology space |
| 5 | Why? | Explain the enterprise strengths of semantic technology |
| 6 | When? | Identify opportunities to exploit semantic technology in the enterprise |
| 7 | When Not? | Avoid misusing semantic technology |
| 8 | Case Study | Look at one of our semantic technology projects |
| 9 | How? | Get started with semantic technology |

We’ll release a couple of videos every other week, so be on the lookout during July and August for the rest of the series. We would appreciate your feedback on the information, and we would like to hear about your experiences deploying semantic technology as part of an enterprise’s application architecture.

The playlist for the series is located at http://www.youtube.com/playlist?list=PLyQYGnkKpiugIl0Tz0_ZlmeFhbWQ4XE1I and will be updated as new videos are released.

Tags: data, enterprise applications, Information Systems, ontology, semantics, system integration

Posted in Architecture, Semantic Technology

Tuesday, August 14th, 2012

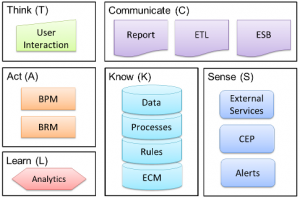

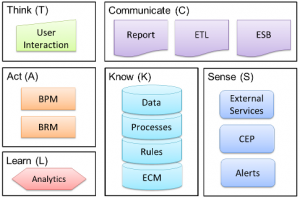

The Cognitive Corporation™ graphic does not highlight the use of semantic technology. Yet semantic technology serves two key roles in the Cognitive Corporation™: data storage (part of Know) and data integration, which connects all of the concepts. I’ll explore the integration role since it is a vital part of supporting a learning organization.

In my last post I explained that integration between components has to be based on the meaning of the data, not simply on passing compatible data types between systems. Semantic technology supports this need through its design. What key capabilities does semantic technology offer in support of integration? Here I’ll highlight a few.

Logical and Physical Structures are (largely) Separate

Compared with a relational database, semantic technology loosens the tie between the logical and physical structures of the data. In a relational database it is the physical structure (columns and tables), along with the foreign keys, that maintains the relationships in the data. Just think back to relational database design class: in a normalized database, all of the column values are related to the table’s key.

This tight tie between data relationships (logical) and data structure (physical) imposes a steep cost if a different set of logical data relationships is desired. Traditionally, we create data marts and data warehouses to allow us to represent multiple logical data relationships. These are copies of the data with differing physical structures and foreign key relationships. We may need these new structures to allow us to report differently on our data or to integrate with different systems which need the altered logical representations.

With semantic data we can take a physical representation of the data (our triples) and apply different logical representations in the form of ontologies. To be fair, the physical structure (subject->predicate->object) imposes certain constraints on the ontology, but a logical transformation is far simpler than a physical one, even with such constraints.
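A toy illustration of that separation, assuming an in-memory set of (subject, predicate, object) tuples rather than a real triple store: the physical data never changes, while two different logical views are derived from it. The `ex:` identifiers and both view functions are hypothetical; in practice an ontology and a query language such as SPARQL would play the role of these hand-written functions.

```python
# One physical representation: (subject, predicate, object) triples.
triples = {
    ("ex:alice", "ex:worksFor", "ex:acme"),
    ("ex:bob", "ex:worksFor", "ex:acme"),
    ("ex:alice", "ex:manages", "ex:bob"),
    ("ex:acme", "ex:locatedIn", "ex:albany"),
}


def objects(subject, predicate):
    """Sorted objects linked to subject by predicate."""
    return sorted(o for s, p, o in triples if s == subject and p == predicate)


def subjects(predicate, obj):
    """Sorted subjects linked to obj by predicate."""
    return sorted(s for s, p, o in triples if p == predicate and o == obj)


def org_chart():
    """Logical view 1: manager -> direct reports."""
    return {s: objects(s, "ex:manages") for s, p, _ in triples if p == "ex:manages"}


def staff_by_location():
    """Logical view 2: location -> people, by chaining worksFor and locatedIn."""
    view = {}
    for company, p, location in triples:
        if p == "ex:locatedIn":
            view.setdefault(location, []).extend(subjects("ex:worksFor", company))
    return view


print(org_chart())          # {'ex:alice': ['ex:bob']}
print(staff_by_location())  # {'ex:albany': ['ex:alice', 'ex:bob']}
```

Neither view copies the data into a new structure, which is the contrast with building a separate data mart for each logical representation.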

(more…)

Tags: cognitive corporation, data, enterprise applications, Information Systems, linkedin, ontology, semantics, system integration

Posted in Architecture, Cognitive Corporation, Data, Information Systems, Semantic Technology, Software Composition

Friday, August 10th, 2012

I am excited to share the news that Blue Slate Solutions has kicked off a formal innovation program, creating a lab environment which will leverage the Cognitive Corporation™ framework and apply it to a suite of processes, tools and techniques. The lab will use a broad set of enterprise technologies, applying the learning organization concepts implicit in the Cognitive Corporation’s™ feedback loop.

I’ve blogged a couple of times (see references at the end of this blog entry) about the Cognitive Corporation™. The depiction has changed slightly but the fundamentals of the framework are unchanged.

The focus is to create a learning enterprise, where the learning is built into the system integrations and interactions. Enterprises have been investing in these individual components for several years; however, they have not truly integrated them in a way that promotes learning.


By “integrating” I mean allowing the systems to understand the meaning of the data being passed between them. Creating a screen in a workflow (BPM) system that presents data from a database to a user is not “integration” in my opinion. It is simply passing data around. Such pass-through connections prevent the enterprise ecosystem (all the components) from working together and collectively learning.

I liken such connections to my taking a hand-written note in a foreign language, which I don’t understand, and typing the text into an email for someone who does understand the original language. Sure, the recipient can read it, but I, representing the workflow tool passing the information from database (note) to screen (email) in this case, have no idea what the data means and cannot possibly participate in learning from it. Integration requires understanding. Understanding requires defined and agreed-upon semantics.

This is just one of the Cognitive Corporation™ concepts that we will be exploring in the lab environment. We will also be looking at the value of these technologies within different horizontal and vertical domains. Given our expertise in healthcare, finance and insurance, our team is well positioned to use the lab to explore the use of learning BPM in many contexts.

(more…)

Tags: BPM, business rules, cognitive corporation, data, enterprise applications, enterprise systems, Information Systems, linkedin, ontology, programming, semantics

Posted in Architecture, BPM, Business Processes, Business Rules, Cognitive Corporation, Data, Data Analytics, Information Systems, Semantic Technology, Software Composition, Software Development, Tools and Applications

Monday, September 26th, 2011

Given my role as an enterprise architect, I’ve had the opportunity to work with many different business leaders, each focused on leveraging IT to improve efficiency, lower costs, increase quality, and broaden market share throughout their businesses. The improvements might involve any subset of data, processes, business rules, infrastructure, software, hardware, etc. A common thread is that each project seeks to make the corporation smarter through the use of information technology.

As I’ve placed these separate projects into a common context of my own, I’ve concluded that the long-term goal of leveraging information technology must be for it to support cognitive processes. I don’t mean that the computers will think for us; rather, IT solutions must work together to allow a business to learn as a corporation.

The individual tools that we utilize each play a part. However, we tend to utilize them in a manner that focuses on isolated and directed operation rather than incorporating them into an overall learning loop. In other words, we install tools that we direct without asking them to help us find better directions to give.

Let me start with a definition: similar to thinking beings, a cognitive corporation™ leverages a feedback loop of information and experiences to inform future processes and rules. Fundamentally, learning is a process: it involves combining known facts and experiences to create new hypotheses, which are tested in order to derive new facts, processes and rules. Unfortunately, we don’t often leverage our enterprise applications in this way.

We have many tools available to us in the enterprise IT realm. These include database management systems, business process management environments, rule engines, reporting tools, content management applications, data analytics tools, complex event processing environments, enterprise service buses, and ETL tools. Individually, these components are used to solve specific, predefined issues with the operation of a business. However, this is not an optimal way to leverage them.

If we consider that these tools mimic aspects of an intelligent being, then we need to leverage them in a fashion that manifests the cognitive capability, rather than simply deploying a point solution. This involves thinking about the tools somewhat differently.

(more…)

Tags: BPM, cognitive corporation, enterprise applications, enterprise systems, Information Systems, linkedin, system integration

Posted in Architecture, BPM, Cognitive Corporation, Information Systems, Tools and Applications

Friday, January 21st, 2011

I was participating in a code review today and was reminded by a senior architect, who started working as an intern for me years ago, of a testing technique I had used with one of his first programs. He had been assigned to create a basic web application that collected some data from a user and wrote it to a database. He came into my office, announced it was done and proudly showed it to me. I walked over to the keyboard, entered a bunch of junk and got a segmentation fault in response.

Although I didn’t have a name for it, that was a standard technique I used when evaluating applications. After all, the tried and true paths, expected inputs and easy errors will be tested early and often as the developer exercises the application using the basic use cases. As Boris Beizer said, “The high-probability paths are always tested if only to demonstrate that the system works properly.” (Beizer, Boris. Software Testing Techniques. Boston, MA: Thomson Computer Press, 1990: 76.)

It is unexpected input that is useful when looking to find untested paths through the code. If someone shows me an application for evaluation, the last thing I need to worry about is using it in an expected fashion; everyone else will do that. In fact, I default to entering data outside the specification when looking at a new application. I don’t know that my team always appreciates the approach. They’d probably like to see the application work at least once while I’m in the room.

These days there is a formal name for this type of testing: fuzzing. A few years ago I preferred calling it “gorilla testing” since I liked the mental picture of beating on the application. (Remember the American Tourister luggage ad in the 1970s?) But alas, it appears that fuzzing has become the accepted term.

Fuzzing involves passing input that breaks the expected input “rules”. Those rules could come from formal requirements, such as an RFC, or informal requirements, such as the set of parameters accepted by an application. Fuzzing tools can use formal standards, extracted patterns and even randomly generated inputs to test an application’s resilience against unexpected or illegal input.
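To illustrate the random-input end of that spectrum, here is a minimal mutation fuzzer in Python. The `parse_record` target and its planted bug are hypothetical, and real fuzzing tools add coverage feedback, input grammars and crash triage, none of which is shown. The sketch treats `ValueError` as the target's documented way of rejecting bad input and records any other exception as a finding.

```python
import random
import string


def parse_record(text):
    """Hypothetical target: expects input such as "alice:42"."""
    if ":" not in text:
        raise ValueError("missing ':' separator")      # documented rejection
    name, _, age = text.partition(":")
    if not name[0].isalpha():                          # bug: empty name -> IndexError
        raise ValueError("name must start with a letter")
    return name, int(age)                              # int() raises ValueError on junk


def mutate(text, rng):
    """Randomly insert, delete or replace one character."""
    chars = list(text)
    pos = rng.randrange(len(chars) + 1)
    op = rng.choice(("delete", "insert", "replace"))
    if op == "insert":
        chars.insert(pos, rng.choice(string.printable))
    elif pos < len(chars):
        if op == "delete":
            del chars[pos]
        else:
            chars[pos] = rng.choice(string.printable)
    return "".join(chars)


def fuzz(target, seeds, iterations=10_000, seed=0):
    """Feed mutated seeds to target. ValueError counts as graceful rejection;
    any other exception is recorded with the first input that triggered it."""
    rng = random.Random(seed)
    findings = {}
    for _ in range(iterations):
        candidate = rng.choice(seeds)
        for _ in range(rng.randint(1, 5)):   # stack a few mutations
            candidate = mutate(candidate, rng)
        try:
            target(candidate)
        except ValueError:
            pass
        except Exception as exc:             # deliberately broad: anything else is a finding
            findings.setdefault(type(exc).__name__, candidate)
    return findings


findings = fuzz(parse_record, ["alice:42", "bob:7"])
print(findings)
```

With these seeds the run should surface the planted bug as an `IndexError` on some input that begins with `:`, an input none of the “expected” use cases would ever try.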

(more…)

Tags: application security, enterprise applications, Information Systems, Internet, linkedin, programming, Security, Testing, vulnerability

Posted in Information Systems, Security, Software Development, Software Security, Testing, Tools and Applications

Thursday, November 18th, 2010

I attended my first semantic web conference this week, the Semantic Web Summit (East) held in Boston. The focus of the event was how businesses can leverage semantic technologies. I was interested in what people were actually doing with the technology. The one and a half days of presentations were informative and diverse.

Our host was Mills Davis, a name that I have encountered frequently during my exploration of the semantic web. He did a great job of keeping the sessions running on time as well as engaging the audience. The presentations were generally crisp and clear. In some cases the speaker presented a product that utilizes semantic concepts, describing its role in the value chain. In other cases we heard about challenges solved with semantic technologies.

My major takeaways were: 1) semantic technologies work and are being applied to a broad spectrum of problems and 2) the potential business applications of these technologies are vast and ripe for creative minds to explore. This all bodes well for people delving into semantic technologies since there is an infrastructure of tools and techniques available upon which to build while permitting broad opportunities to benefit from leveraging them.

As a CTO with 20+ years focused on business environments, including application development, enterprise application integration, data warehousing, and business intelligence, I identified most closely with the sessions geared around intra-business and B2B uses of semantic technology. There were other sessions looking at B2C uses, which were well done but not applicable to the world in which I currently work.

Talks by Dennis Wisnosky and Mike Dunn were particularly focused on the business value that can be achieved through the use of semantic technologies. Further, they helped to define basic best practices that they apply to such projects. Dennis in particular gave specific information around his processes and architecture while talking about the enormous value that his team achieved.

Heartening to me was the fact that these best practices, processes and architectures are not significantly different from those used in other enterprise system endeavors. So we don’t need to retool all our understanding of good project management practices and infrastructure design; we just need to internalize where semantic technology best fits into the technology stack.

(more…)

Tags: enterprise applications, enterprise systems, Information Systems, linkedin, semantic web, semantics, system integration

Posted in Architecture, Information Systems, Semantic Web, Software Composition, Tools and Applications

Saturday, September 25th, 2010

My last two days at JavaOne 2010 included some interesting sessions as well as spending some time in the pavilion. I’ll mention a few of the session topics that I found interesting as well as some of the products that I intend to check out.

I attended a session on creating a web architecture focused on high-performance with low-bandwidth. The speaker was tasked with designing a web-based framework for the government of Ethiopia. He discussed the challenges that are presented by that country’s infrastructure – consider network speed on the order of 5Kbps between sites. He also had to work with an IT group that, although educated and intelligent, did not have a lot of depth beyond working with an Oracle database’s features.

His solution allows developers to create fully functional web applications that keep exchanged payloads under 10K. Although I understand the logic of the approach in this case, I’m not sure the technique would be practical in situations without such severe bandwidth and skill set limitations.

A basic theme during his talk was to keep the data and logic tightly co-located. In his case it is all located in the database (PL/SQL), but he agreed that it could all be in the application tier (e.g. NoSQL). I’m not convinced that this is a good approach to creating maintainable high-volume applications. It could be that the business applications and business verticals in which I often find myself differ from the use cases that are common to developers promoting the removal of tiers from the stack (whether removing the DB server or the mid-tier logic server).

One part of his approach with which I absolutely concur is to push processing onto the client. Using the client’s CPU seems like common sense to me. The challenge lies in balancing that with security and bandwidth. However, it can be done, and I believe we will continue to find more effective ways to leverage all that computing power.

I also enjoyed a presentation on moving data between a data center and the cloud to perform heavy and intermittent processing. The presenters did a great job of describing their trials and successes with leveraging the cloud to perform computationally expensive processing on transient data (e.g. they copy the data up each time they run the process rather than pay to store their data). They also provided a lot of interesting information regarding options, advantages and challenges when leveraging the cloud (Amazon EC2 in this case).

(more…)

Tags: data, enterprise applications, enterprise systems, Information Systems, Internet, Java, linkedin, programming

Posted in Architecture, Information Systems, Java, Software Development, Tools and Applications

Monday, July 26th, 2010

InformationWeek Analytics (http://analytics.informationweek.com/index) invited me to write about the subject of process automation. The article, part of their series covering application architectures, was released in July of this year. It provided an opportunity for me to articulate the key components that are required to succeed in the automation of business processes.

Both the business and IT are positioned to make-or-break the use of process automation tools and techniques. The business must redefine its processes and operational rules so that work may be automated. IT must provide the infrastructure and expertise to leverage the tools of the process automation trade.

Starting with the business, there must be clearly defined processes by which work gets done. Each process must be documented, including the points where decisions are made. The rules for those decisions must then be documented. Repetitive, low-value and low-risk decisions are immediate candidates for automation.

A key measure of whether process automation is delivering sustainable and meaningful value is the rate of Straight Through Processing (STP). STP requires that work arrive from a third party and be processed automatically, returning a final decision and any necessary output (letter, claim payment, etc.) without a person being involved in handling the work.

Most businesses begin using process automation tools without achieving any significant STP rate. This is fine as a starting point so long as the business reviews the manual work, identifies groupings of work, focuses on the largest groupings (large may be based on manual effort, cost or simple volume) and looks to automate the decisions surrounding that group of work. As STP is achieved for some work, the review process continues as more and more types of work are targeted for automation.
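The review loop described above can be sketched in a few lines of Python. The `WorkItem` fields, the work types and the sample volumes are all hypothetical; the sketch computes an STP rate (the share of items completed with no person involved) and ranks the manually handled groupings by total manual effort, one of the size measures mentioned above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkItem:
    work_type: str   # hypothetical grouping key, e.g. a claim category
    automated: bool  # True if processed end to end with no person involved
    minutes: float   # manual handling time (0 when automated)


def stp_rate(items):
    """Fraction of work items that went straight through."""
    return sum(item.automated for item in items) / len(items)


def automation_candidates(items, top=3):
    """Rank groupings of manual work by total manual effort (largest first)."""
    effort = defaultdict(float)
    for item in items:
        if not item.automated:
            effort[item.work_type] += item.minutes
    return sorted(effort.items(), key=lambda kv: kv[1], reverse=True)[:top]


# Hypothetical sample: 100 items, 66 of them already fully automated.
items = (
    [WorkItem("address change", True, 0)] * 66
    + [WorkItem("address change", False, 4)] * 10
    + [WorkItem("claim under $500", False, 12)] * 20
    + [WorkItem("disputed claim", False, 30)] * 4
)
print(f"STP rate: {stp_rate(items):.0%}")  # STP rate: 66%
print(automation_candidates(items))
# [('claim under $500', 240.0), ('disputed claim', 120.0), ('address change', 40.0)]
```

Changing the sort key to item count or cost gives the other size measures. Ranking by effort alone ignores risk, though: a grouping such as disputed claims may rank high yet still belong with people.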

The end goal of process automation is to have people involved in truly exceptional, high-value, high-risk business decisions. The business benefits by having people attend to items that truly matter rather than dealing with a large amount of background noise that lowers productivity, morale and client satisfaction.

All of this is great in theory but requires an information technology infrastructure that can meet these business objectives.

(more…)

Tags: BPM, business rules, enterprise applications, Information Systems, linkedin, Process Automation, process modeling, system integration, web services, Workflow

Posted in Architecture, Information Systems, Software Development, Tools and Applications

Wednesday, May 12th, 2010

One of the aspects of agile software development that may lead to significant angst is the database. Unlike refactoring code, the refactoring of the database schema involves a key constraint – state! A developer may rearrange code to his or her heart’s content with little worry since the program will start with a blank slate when execution begins. However, the database “remembers.” If one accepts that each iteration of an agile process produces a production release, then the stored data can’t be deleted as part of the next iteration.

The refactoring of a database becomes less and less trivial as project development continues. While developers have IDEs to refactor code, change packages, and alter build targets, there are few tools for refactoring databases.

My definition of a database refactoring tool is one that assists the database developer by remembering the database transformation steps and storing them as part of the project (e.g. as part of the build process). This includes both the schema changes and data transformations. Remember that the entire team will need to reproduce these steps on local copies of the database. It must be as easy to incorporate a peer’s database schema changes, without losing data, as it is to incorporate the code changes.

These same data-centric complexities exist in waterfall approaches when going from one version to the next. Whenever the database structure needs to change, a path to migrate the data has to be defined. That transformation definition must become part of the project’s artifacts so that the data migration for the new version is supported as the program moves between environments (test, QA, load test, integrated test, and production). Also, the database transformation steps must be automated and reversible!
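A minimal sketch of such a tool, using Python’s built-in `sqlite3` module: each migration is an ordered, versioned pair of forward and reverse steps kept with the project’s source, and a small runner records the current version so every developer and environment can replay the same steps without losing data. The `MIGRATIONS` list, the table names and the `schema_version` bookkeeping table are hypothetical choices for illustration.

```python
import sqlite3

# Versioned, ordered steps kept with the project's source:
# (version, upgrade statements, downgrade statements).
MIGRATIONS = [
    (1,
     ["CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, phone TEXT)"],
     ["DROP TABLE customer"]),
    (2,  # customers turn out to have many phones: copy data into a new table
     ["CREATE TABLE customer_phone (customer_id INTEGER REFERENCES customer(id), phone TEXT)",
      "INSERT INTO customer_phone SELECT id, phone FROM customer WHERE phone IS NOT NULL"],
     ["DROP TABLE customer_phone"]),
]


def current_version(conn):
    """Return the highest applied migration version (0 for a new database)."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    return conn.execute("SELECT MAX(version) FROM schema_version").fetchone()[0] or 0


def migrate(conn, target):
    """Move the database forward or backward to `target` in one transaction."""
    version = current_version(conn)
    conn.execute("BEGIN")
    try:
        for v, up, _ in MIGRATIONS:                  # upgrades, oldest first
            if version < v <= target:
                for sql in up:
                    conn.execute(sql)
                conn.execute("INSERT INTO schema_version VALUES (?)", (v,))
        for v, _, down in reversed(MIGRATIONS):      # downgrades, newest first
            if target < v <= version:
                for sql in down:
                    conn.execute(sql)
                conn.execute("DELETE FROM schema_version WHERE version = ?", (v,))
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")                     # leave the database unchanged
        raise
    return current_version(conn)


# isolation_level=None lets the runner issue BEGIN/COMMIT itself.
conn = sqlite3.connect(":memory:", isolation_level=None)
migrate(conn, 1)
conn.execute("INSERT INTO customer VALUES (1, 'Ada', '555-0100')")
print(migrate(conn, 2))  # 2
print(conn.execute("SELECT * FROM customer_phone").fetchall())  # [(1, '555-0100')]
print(migrate(conn, 1))  # 1 (customer_phone dropped, customer rows untouched)
```

The design choice here is that version 2 copies data rather than dropping the original column, which keeps its downgrade simple and lossless; a migration that discards data would need a different rollback strategy, such as the full backup discussed next.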

That last point, the ability to rollback, is a key part of any rollout plan. We must be able to back out changes. It may be that the approach to a rollback is to create a full database backup before implementing the update, but that assumption must be documented and vetted (e.g. the approach of a full backup to support the rollback strategy may not be reasonable in all cases).

This database refactoring issue becomes very tricky when dealing with multiple versions of an application. The transformation of the database schema and data must be done in a defined order. As more and more data is stored, the process consumes more storage and processing resources. This is the ETL side-effect of any system upgrade. Its impact is simply felt more often (e.g. potentially during each iteration) in an agile project.

As part of exploring semantic technology, I am interested in contrasting this to a database that consists of RDF triples. The semantic relationships of data do not change as often (if at all) as the relational constructs. Many times we refactor a relational database as we discover concepts that require one-to-many or many-to-many relationships.
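A toy illustration of the multiplicity question, assuming a simple in-memory list of triples (the `ex:` identifiers are hypothetical): when a customer turns out to have a second phone number, the triple form simply gains another statement with the same predicate, whereas a normalized relational schema would typically need a new table and a data migration. This is a sketch, not an answer to the questions below; if an ontology had declared the phone property functional (single-valued), that declaration would still need to change.

```python
# Before: each customer happens to have a single phone number.
triples = [
    ("ex:cust1", "ex:name", "Ada"),
    ("ex:cust1", "ex:phone", "555-0100"),
]


def values(subject, predicate):
    """Return every object for subject/predicate; works for one value or many."""
    return [o for s, p, o in triples if s == subject and p == predicate]


print(values("ex:cust1", "ex:phone"))  # ['555-0100']

# After: the one-to-many discovery is just one more triple.
# No table split, no foreign key, no rewrite of existing statements.
triples.append(("ex:cust1", "ex:phone", "555-0199"))
print(values("ex:cust1", "ex:phone"))  # ['555-0100', '555-0199']
```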

Is an RDF triple-based database easier to refactor than a relational database? Is there something about the use of RDF triples that reduces the likelihood of a multiplicity change leading to a structural change in the data? If so, using RDF as the data format could be a technique that simplifies the development of applications. For now, let’s take a high-level look at a refactoring use case.

(more…)

Tags: agile development, efficient coding, enterprise applications, enterprise systems, Information Systems, linkedin, ontology, refactoring, semantic web, semantics, system integration

Posted in Architecture, Information Systems, Semantic Web, Software Composition, Software Development, Tools and Applications