April 18th, 2014

I am thrilled to have been invited back to participate at the Semantic Technology and Business (SemTechBiz) conference. This is the premier US conference for learning about, exploring and getting your hands on semantic technology. I’ll be part of a Blue Slate team (including Scott Van Buren and Michael Delaney) who will be conducting a half-day hands-on workshop, Integrating Data Using Semantic Technology, on August 19, 2014. Our mission is to have participants use semantic technology to integrate, federate and perform analysis across several data sources.

We have some work to do to iron out our overall use case, pulling from work we have done with several clients. At a minimum we’ll be working with database schemas, ontologies, reasoners and data analytics tools. It will be a fun and educational experience for attendees.

I’ll post more specifics once the SemTechBiz agenda is published and we have finalized the workshop structure. I hope to see you this August 19-21 in San Jose for our workshop and the amazing learning opportunities throughout the conference.

For more information on the conference, visit its website: http://semtechbizsj2014.semanticweb.com/index.cfm

Tags: conference, data, ontology, semantics, teaching

Posted in Data, Data Analytics, Semantic Technology, Tools and Applications

April 15th, 2014

I routinely receive emails, tweets and snail mail from IT vendors that focus on how their solution accelerates the creation of business applications. They will quote executives and technology leaders, citing case studies that compare the time to build an application on their platform versus others. They will make the claim that this speed to release proves that their platform, tool or solution is “better” than the competition. Further, they claim that it will provide similar value for my business’ application needs. The focus of these advertisements is consistently, “how long did it take to initially create some application.”

This speed-to-create metric is pointless for a couple of reasons. First, an experienced developer will be fast when throwing together a solution using his or her preferred tools. Second, an application spends years in maintenance versus the time spent to build its first version.

Build it fast!

Years ago I built applications for GE in C. I was fast. Once I had a good set of libraries, I could build applications for turbine parts catalogs in days. This was before windowing operating systems. There were frameworks from companies like Borland that made it trivial to create an interactive interface. I moved on to Visual Basic and SQLWindows development and was equally fast at creating client-server applications for GE’s field engineering team. I progressed to C++ and created CGI-based web applications. Again, building and deploying applications in days. Java followed, and I created and deployed applications using the leading-edge Netscape browser and Java Applets in days and eventually hours for trivial interfaces.

Since 2000 I’ve used BPM and BRM platforms such as PegaRULES, Corticon, Appian and ILOG. I’ve developed applications using frameworks like Struts, JSF, Spring, Hibernate and the list goes on. Through all of this, I’ve lived the euphoria of the initial release and the pain of refactoring for release 2. In my experience not one of these platforms has simplified the refactoring of a weak design without a significant investment of time.

Speed to initial release is not a meaningful measure of a platform’s ability to support business agility. There is little pain in version 1 regardless of the design thought that goes into it. Agility is about versions 2 and beyond. Specifically, we need to understand what planning and practices during prior versions are necessary to promote agility in future versions.

Tags: BPM, efficient coding, Information Systems, programming

Posted in BPM, Information Systems, Software Composition, Software Development

April 12th, 2014

There has been a lot of information flying about on the Internet concerning the Heartbleed vulnerability in the OpenSSL library. Among system administrators and software developers there is a good understanding of exactly what happened, the potential data losses and proper mitigation processes. However, I’ve seen some inaccurate descriptions and discussion in less technical settings.

I thought I would attempt to explain the Heartbleed issue at a high level without focusing on the implementation details. My goal is to help IT and business leaders understand a little bit about how the vulnerability is exploited, why it puts sensitive information at risk and how this relates to their own software development shops.

Heartbleed is a good case study for developers who don’t always worry about data security, feeling that attacks are hard and vulnerabilities are rare. This should serve as a wake-up call that programs need to be tested in two ways: for use cases and for misuse cases. We often focus on use cases: “does the program do what we want it to do?” Less frequently do we test for misuse cases: “does the program do things we don’t want it to do?” We need to do more of the latter.
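
To make the use-case/misuse-case distinction concrete, here is a minimal Python sketch of a heartbeat-style echo service. It is a deliberately simplified model, not OpenSSL’s code: the function names, the `SECRET` bytes and the idea of “memory” as a byte string holding the request payload next to unrelated data are all assumptions for illustration. The trusting version returns however many bytes the requester claims to have sent; the checked version discards a request whose claimed length exceeds the payload actually received.

```python
# Simplified model of a heartbeat echo, NOT the OpenSSL implementation.
# SECRET is hypothetical data that happens to sit next to the payload in memory.
SECRET = b"user=alice;password=hunter2"


def trusting_heartbeat(payload: bytes, claimed_length: int) -> bytes:
    """Echo back claimed_length bytes without checking the real payload size."""
    memory = payload + SECRET  # the payload is stored beside other data
    return memory[:claimed_length]


def checked_heartbeat(payload: bytes, claimed_length: int) -> bytes:
    """Discard any request whose claimed length exceeds the payload sent."""
    if claimed_length > len(payload):
        return b""  # drop the malformed request instead of answering it
    return payload[:claimed_length]


# Use case: does the responder do what we want it to do? Both versions pass.
for responder in (trusting_heartbeat, checked_heartbeat):
    assert responder(b"bird", 4) == b"bird"

# Misuse case: does it do things we don't want it to do?
# The trusting version answers an oversized claim with neighboring data...
assert b"password" in trusting_heartbeat(b"bird", 30)
# ...while the checked version returns nothing.
assert checked_heartbeat(b"bird", 30) == b""
```

Both responders pass the use-case test; only the misuse-case test exposes the leak.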

I’ve created a 10-minute video to walk through Heartbleed. It includes the parable of a “trusting change machine.” The parable is meant to explain the Heartbleed mechanics without requiring that the viewer be an expert in programming or data encryption.

If you have thoughts about ways to clarify concepts like Heartbleed to a wider audience, please feel free to comment. Data security requires cooperation throughout an organization. Effective and accurate communication is vital to achieving that cooperation.

Here are the links mentioned in the video:

Tags: application security, data security, Information Systems, Internet, mitigation, programming, Security, Testing, vulnerability

Posted in Information Systems, Security, Software Development, Software Security, Testing

April 3rd, 2014

Data Unleashed™. The name expresses a vision of data freed from its shackles so that it can be quickly and iteratively accessed, related, studied and expanded. In order to achieve that vision, the process of combining, or federating, the data must be lightweight. That is, the approach must facilitate rapid data set expansion and on-the-fly relationship changes so that we may quickly derive insights. Likewise, the process must not include a significant investment in data structure design, since agility requires that we avoid a rigid structure.

Over the past year Blue Slate Solutions has been advancing its processes and technology to support this vision, which comprises the integration between components in our Cognitive Corporation® framework. More recently we have invested in an innovation development project to take our data integration experiences and semantic technology expertise and create a service offering backed by a lightweight data federation platform. Our platform, Data Unleashed™, enables us to partner with customers who are seeking an agile, lightweight enhancement to traditional data warehousing.

I want to emphasize that we believe that the Data Unleashed™ approach to data federation works in tandem with traditional Data Warehouses (DW) and other well-defined data federation options. It offers agility around data federation, benefiting focused data needs for which warehouses are overkill, while supporting a process for iteratively deriving value using a lightweight data warehouse™ approach that informs a broader warehousing solution.

At a couple of points below I emphasize differences between Data Unleashed™ and a traditional DW. This is not meant to disparage the value of a DW but to explain why we feel that Data Unleashed™ adds a set of data federation capabilities to those of the DW.

As an aside, Blue Slate is producing a set of videos specifically about semantic technology, which is a core component of Data Unleashed™. The video series, “Semantic Technology, An Enterprise Introduction,” will be organized in two tracks, business-centric and technology-centric. Our purpose in creating these is to promote a holistic understanding of the value that semantics brings to an organization. The initial video provides an overview of the series.

What is Data Unleashed™ All About?

Data Unleashed™ is based on four key premises:

- the variety of data and data sources that are valuable to a business continue to grow;

- only a subset of the available data is valuable for a specific reporting or analytic need;

- integration and federation of data must be based on meaning in order to support new insights and understanding; and

- lightweight data federation, which supports rapid feedback regarding data value, quality and relationships, speeds the process of developing a valuable data set.
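
As a rough illustration of the last two premises, the Python sketch below federates two hypothetical sources by mapping only the fields of interest onto shared, meaning-based predicates and merging the resulting triples. The source rows, the `ex:` predicate names and the helper functions are assumptions made for illustration; this is not the Data Unleashed™ platform, which would rely on real ontologies and a triple store rather than Python sets.

```python
from collections import defaultdict

# Two hypothetical sources describing the same customers with different field names.
crm_rows = [
    {"cust_id": "C1", "full_name": "Acme Corp"},
    {"cust_id": "C2", "full_name": "Globex"},
]
billing_rows = [
    {"account": "C1", "balance_usd": 1200, "internal_code": "X9"},
]

# Map only the fields we care about onto shared predicates.
# The "ex:" terms are illustrative placeholders, not a published vocabulary.
CRM_MAP = {"full_name": "ex:name"}
BILLING_MAP = {"balance_usd": "ex:balance"}


def to_triples(rows, key_field, field_map):
    """Convert tabular rows into (subject, predicate, object) triples."""
    triples = set()
    for row in rows:
        subject = f"ex:customer/{row[key_field]}"
        for field, predicate in field_map.items():
            if field in row:
                triples.add((subject, predicate, row[field]))
    return triples


# Federation here is simply a set union over triples that share subjects.
graph = to_triples(crm_rows, "cust_id", CRM_MAP) | to_triples(
    billing_rows, "account", BILLING_MAP
)


def describe(graph, subject):
    """Gather every predicate/object pair known about a subject, across sources."""
    facts = defaultdict(list)
    for s, p, o in graph:
        if s == subject:
            facts[p].append(o)
    return dict(facts)


# Name comes from the CRM rows, balance from the billing rows.
print(describe(graph, "ex:customer/C1"))
```

Adding a third source means writing another small field map rather than redesigning a schema, and an unmapped field such as `internal_code` never enters the federated data set.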

I’ll briefly describe our thinking around each of these points. Future posts will go into more depth about Data Unleashed™ as well. In addition, several Blue Slate leaders will be posting their thoughts about this offering and platform.

Tags: cognitive corporation, data, enterprise applications, enterprise systems, Information Systems, lightweight data federation, lightweight data warehouse, ontology, semantics

Posted in Architecture, Cognitive Corporation, Data, Data Unleashed, Information Systems, Semantic Technology

February 14th, 2014

I recently purchased a Wacom Intuos tablet to connect to my computer as a tool to allow real-time annotation on slides during a presentation. These presentations could be recorded or live in nature. Using the mouse or touchpad was too limiting and magnified my already poor penmanship.

Once I had the tablet hooked up it was easy to annotate using PowerPoint’s own menus for selecting the mode (pen, highlighter) and colors. However, the navigation to access those features required traversing through an on-screen menu each time the pen was being selected or whenever I wanted to change the pen color. This was a real nuisance and meant that there would be an on-screen distraction and presentation delay whenever I had to navigate through the menu.

I hunted around for an alternative one-click shortcut approach and could not find one. Maybe I missed it, but I finally decided to see if I could use PowerPoint macros and active shapes to give me a simple way to select pens and colors. I did get it to function, and it works well for my purposes.

I’ve documented and demonstrated what I did in a short video. I’m sharing it in case others are looking for an option to do something similar. Once you get the framework in place, it provides the flexibility to use macros for more than just pen color control, but I’ll leave that to the reader’s and viewer’s imagination.

The video is located at: http://monead.com/video/WacamIntuosTabletandPptAnnotations/

The basic pen color macros that I use in the video are located at: http://monead.com/ppt_pen_macros.txt

I’d enjoy hearing if you have alternative ways to accomplish this or find interesting ways to apply the technique to other presentation features. Happy presenting!

Tags: annotate, drawing tablet, education, powerpoint, presentation

Posted in Tools and Applications

July 8th, 2013

At this year’s Semantic Technology and Business Conference in San Francisco, Mike Delaney and I presented a session on semantic technology adoption in the enterprise, entitled When to Consider Semantic Technology for Your Enterprise. Our talk centered on three key messages: 1) describe semantic technology as it relates to enterprise data and applications; 2) discuss where semantic technology augments current data persistence and access technologies; and 3) highlight situations that should lead an enterprise to begin using semantic technology as part of its enterprise architecture.

In order to allow a broader audience to benefit from our session we are creating a set of videos based on our original presentation. These are being released as part of Blue Slate Solutions’ Experts Exchange Series. Each video will be 5 to 10 minutes in length and will focus on one of the sub-topics from the presentation.

Here is the overall agenda for the video series:

| # | Title | Description |
|---|-------|-------------|
| 1 | Introduction | Meet the presenters and the topic |
| 2 | What? | Define Semantic Technology in the context of these videos |
| 3 | What’s New? | Compare semantic technology to relational and NoSQL technologies |
| 4 | Where? | Discuss the ecosystem and maturity of vendors in the semantic technology space |
| 5 | Why? | Explain the enterprise strengths of semantic technology |
| 6 | When? | Identify opportunities to exploit semantic technology in the enterprise |
| 7 | When Not? | Avoid misusing semantic technology |
| 8 | Case Study | Look at one of our semantic technology projects |
| 9 | How? | Get started with semantic technology |

We’ll release a couple of videos every other week, so be on the lookout during July and August for this series to be completed. We would appreciate your feedback on the information as well as hearing about your experiences deploying semantic technology as part of an enterprise’s application architecture.

The playlist for the series is located at: http://www.youtube.com/playlist?list=PLyQYGnkKpiugIl0Tz0_ZlmeFhbWQ4XE1I The playlist will be updated with the new videos as they are released.

Tags: data, enterprise applications, Information Systems, ontology, semantics, system integration

Posted in Architecture, Semantic Technology

September 3rd, 2012

I don’t often purchase dairy goods at Target. Today was an exception. Usually I head to Target for videos, office supplies, gift cards, Halloween decorations and so forth. On this occasion I needed some shipping boxes, birthday napkins and eggs. Rather than stop at two stores I decided to get the eggs at Target.

So why the posting? Well, either my math skills are getting really poor (probably due to my relentless exposure to computers and calculators) or Target has some issue with understanding egg pricing. Here are pictures of the price labels in the dairy section (taken at the Target in Clifton Park, NY on September 3, 2012). Anything look askew?

Now, if the errors were consistent, I guess I could understand. After all, converting from “12 eggs” to “price per dozen” takes some understanding of the word “dozen” along with the principle of unit pricing. What I find interesting is that the unit pricing calculation for these eggs is somewhat cracked since it does not seem to follow a pattern. Also, apparently no one has noticed these strange unit prices.
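
For reference, unit pricing is simple arithmetic: scale the package price to twelve eggs. The Python sketch below uses made-up prices (not the ones on the photographed labels), and the round-half-up rounding to the cent is an assumption about how a store would round. A 12-egg package should always show a per-dozen price equal to its shelf price.

```python
from decimal import Decimal, ROUND_HALF_UP


def price_per_dozen(package_price: Decimal, egg_count: int) -> Decimal:
    """Scale a package price to 12 eggs and round to the nearest cent."""
    per_dozen = package_price * 12 / egg_count
    return per_dozen.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical shelf prices, not the ones photographed in the store.
for price, count in [(Decimal("1.99"), 12), (Decimal("2.79"), 18), (Decimal("1.09"), 6)]:
    print(f"{count} eggs at ${price}: ${price_per_dozen(price, count)} per dozen")
```

Using `Decimal` rather than floating point keeps cent values exact, which matters when the whole point is checking a label’s arithmetic.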

Tags: consumer awareness, linkedin, unit pricing

Posted in Consumer, Quality

August 14th, 2012

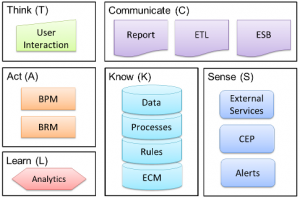

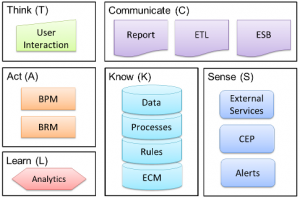

When depicting the Cognitive Corporation™ as a graphic, the use of semantic technology is not highlighted. Semantic technology serves two key roles in the Cognitive Corporation™ – data storage (part of Know) and data integration, which connects all of the concepts. I’ll explore the integration role since it is a vital part of supporting a learning organization.

In my last post I talked about the fact that integration between components has to be based on the meaning of the data, not simply passing compatible data types between systems. Semantic technology supports this need through its design. What key capabilities does semantic technology offer in support of integration? Here I’ll highlight a few.

Logical and Physical Structures are (largely) Separate

Semantic technology reduces the tie between the logical and physical structures of the data compared to a relational database. In a relational database it is the physical structure (columns and tables), along with the foreign keys, that maintains the relationships in the data. Just think back to relational database design class: in a normalized database, all of the column values are related to the table’s key.

This tight tie between data relationships (logical) and data structure (physical) imposes a steep cost if a different set of logical data relationships is desired. Traditionally, we create data marts and data warehouses to allow us to represent multiple logical data relationships. These are copies of the data with differing physical structures and foreign key relationships. We may need these new structures to allow us to report differently on our data or to integrate with different systems which need the altered logical representations.

With semantic data we can take a physical representation of the data (our triples) and apply different logical representations in the form of ontologies. To be fair, the physical structure (subject->predicate->object) forces certain constraints on the ontology, but a logical transformation is far simpler than a physical one even with such constraints.
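
A toy Python sketch of this idea: the triples are stored once, and two different “ontologies,” reduced here to simple inverse-property and sub-property rules, produce two different logical views without touching the stored data. The `ex:` names and the rule format are hypothetical; a real deployment would express such rules in RDFS/OWL (for example `owl:inverseOf` and `rdfs:subPropertyOf`) and let a reasoner apply them. This sketch applies each rule once and does not chain inferences.

```python
# Physical layer: one set of triples, stored once. All names are hypothetical.
TRIPLES = {
    ("ex:alice", "ex:reportsTo", "ex:bob"),
    ("ex:carol", "ex:reportsTo", "ex:bob"),
    ("ex:bob", "ex:memberOf", "ex:sales"),
}

# Logical layer: two small "ontologies" expressed as simple rule tables.
MANAGEMENT_VIEW = {"inverse_of": {"ex:reportsTo": "ex:manages"}, "sub_property_of": {}}
ORG_VIEW = {
    "inverse_of": {},
    "sub_property_of": {"ex:reportsTo": "ex:relatedTo", "ex:memberOf": "ex:relatedTo"},
}


def apply_view(triples, ontology):
    """Return the stored triples plus those implied by the ontology's rules (one pass)."""
    inferred = set(triples)  # copy, so the physical data is never modified
    for s, p, o in triples:
        inverse = ontology["inverse_of"].get(p)
        if inverse:
            inferred.add((o, inverse, s))
        parent = ontology["sub_property_of"].get(p)
        if parent:
            inferred.add((s, parent, o))
    return inferred


def objects(graph, subject, predicate):
    """Sorted objects of all triples matching a subject and predicate."""
    return sorted(o for s, p, o in graph if s == subject and p == predicate)


management = apply_view(TRIPLES, MANAGEMENT_VIEW)
org = apply_view(TRIPLES, ORG_VIEW)
print(objects(management, "ex:bob", "ex:manages"))  # ['ex:alice', 'ex:carol']
print(objects(org, "ex:bob", "ex:relatedTo"))  # ['ex:sales']
```

Changing the logical view means editing a rule table; the relational equivalent would typically be a new mart or warehouse with its own physical copy of the data.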

Tags: cognitive corporation, data, enterprise applications, Information Systems, linkedin, ontology, semantics, system integration

Posted in Architecture, Cognitive Corporation, Data, Information Systems, Semantic Technology, Software Composition

August 10th, 2012

I am excited to share the news that Blue Slate Solutions has kicked off a formal innovation program, creating a lab environment which will leverage the Cognitive Corporation™ framework and apply it to a suite of processes, tools and techniques. The lab will use a broad set of enterprise technologies, applying the learning organization concepts implicit in the Cognitive Corporation’s™ feedback loop.

I’ve blogged a couple of times (see references at the end of this blog entry) about the Cognitive Corporation™. The depiction has changed slightly but the fundamentals of the framework are unchanged.

The focus is to create a learning enterprise, where the learning is built into the system integrations and interactions. Enterprises have been investing in these individual components for several years; however, they have not truly been integrating them in a way that promotes learning.

By “integrating” I mean allowing the systems to understand the meaning of the data being passed between them. Creating a screen in a workflow (BPM) system that presents data from a database to a user is not “integration” in my opinion. It is simply passing data around. This prevents the enterprise ecosystem (all the components) from working together and collectively learning.

I liken such connections to my taking a hand-written note in a foreign language, which I don’t understand, and typing the text into an email for someone who does understand the original language. Sure, the recipient can read it, but I, representing the workflow tool passing the information from database (note) to screen (email) in this case, have no idea what the data means and cannot possibly participate in learning from it. Integration requires understanding. Understanding requires defined and agreed-upon semantics.

This is just one of the Cognitive Corporation™ concepts that we will be exploring in the lab environment. We will also be looking at the value of these technologies within different horizontal and vertical domains. Given our expertise in healthcare, finance and insurance, our team is well positioned to use the lab to explore the use of learning BPM in many contexts.

Tags: BPM, business rules, cognitive corporation, data, enterprise applications, enterprise systems, Information Systems, linkedin, ontology, programming, semantics

Posted in Architecture, BPM, Business Processes, Business Rules, Cognitive Corporation, Data, Data Analytics, Information Systems, Semantic Technology, Software Composition, Software Development, Tools and Applications

June 14th, 2012

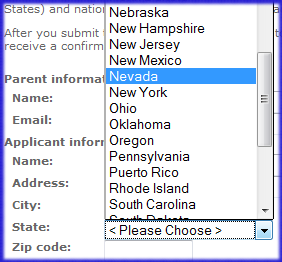

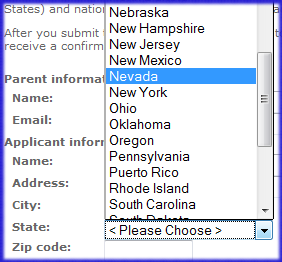

This post certainly falls into the “nitpick” category, but the flaw occurs often enough to be somewhat irritating. The problem, you ask? Drop-down lists of state names that are not ordered by the state name but instead by the state’s 2-letter postal abbreviation. Granted, this error pales in comparison to applications containing SQL injection flaws or race conditions exposing personal information, but I’m going to complain nonetheless.

What exactly is the issue? Well, it turns out that the two-letter postal abbreviations (for example AK for Alaska and HI for Hawaii) can’t be used as the key for sorting the state names into alphabetical order. For the most part it works; however, for some states, such as Nevada through New Mexico, it breaks. As a New York resident, I get tripped up by this.

The image shown here is a web form for a college admissions site. As you can see, Nevada follows New Hampshire, New Jersey and New Mexico but precedes New York. In reality it should follow Nebraska and precede New Hampshire. This order is incorrect; it is based on the state abbreviations. If instead of state names the website were displaying the state abbreviations, the order would be NH, NJ, NM, NV, NY and all would be fine.

The developer(s) of this site are not alone in their mistaken use of the postal abbreviation as the sort key. I’ve encountered this issue with online shopping sites, reservation systems and survey forms. I typically do a quick “view source” of the site and invariably they are using the state abbreviation as the actual value being passed to the server. I’m sure they are using that for sorting as well.

You might think this sort of thing doesn’t matter. From my point of view it represents a “broken window,” using Andy Hunt’s and Dave Thomas’ language from The Pragmatic Programmer. Little things count. Little things left uncorrected form an environment where developers may become more and more sloppy. After all, if I don’t need to pay attention to my sort key for state, what’s to say I won’t make a similar mistake with country or a product list or any other collection of values that is supposed to be ordered to make access easier?

Please, if you are designing an input form, make sure that sorted information displayed by your widgets is sorted by the display value, not some internal code. It will make the use of your form easier for users and garner the respect of your fellow developers.
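
A minimal Python sketch of the difference, using a handful of real postal codes paired with their display names. The function names are illustrative. The postal code can still be the value the form submits to the server; only the sort key changes.

```python
# (postal code, display name) pairs, as a form might store them.
STATES = [
    ("NE", "Nebraska"),
    ("NV", "Nevada"),
    ("NH", "New Hampshire"),
    ("NJ", "New Jersey"),
    ("NM", "New Mexico"),
    ("NY", "New York"),
]


def sorted_by_code(states):
    """The flawed ordering: the sort key is the internal postal code."""
    return [name for code, name in sorted(states, key=lambda s: s[0])]


def sorted_by_name(states):
    """The intended ordering: the sort key is the value the user actually sees."""
    return [name for code, name in sorted(states, key=lambda s: s[1])]


print(sorted_by_code(STATES))  # ... New Mexico, Nevada, New York (the flawed order)
print(sorted_by_name(STATES))  # Nebraska, Nevada, New Hampshire, ... (alphabetical)
```

For lists that include accented or non-English names, a locale-aware collation may be a better sort key than plain string comparison.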

Have you seen this flaw on websites you’ve visited? Do you have pet peeves with online form designs? I’d enjoy hearing about them.

Tags: linkedin, online forms, programming, quality, sorting, user interface

Posted in Quality, Software Development
