Going Green Means More Green Going?

Thursday, August 11th, 2011

Readers of my blog may be aware that I own a hybrid car, a 2007 Civic Hybrid to be precise. I have kept a record of almost every gas purchase, recording the date, accumulated mileage, gallons used and price paid, as well as the calculated and claimed MPG. Now that I have four years of data, I thought I could use it to evaluate the impact of fuel efficiency on my total cost of ownership (TCO).

I had two questions I wanted to answer: 1) did I achieve the vehicle’s advertised MPG; and 2) are the gas savings significant versus owning a non-hybrid?

To answer the second question I needed to choose an alternate vehicle to represent the non-hybrid. I thought a good non-hybrid to compare would be the 2007 Civic EX since the features are similar to my car, other than the hybrid engine.

Some caveats: I am not including service visits, new tires or the time value of money in my TCO calculations.

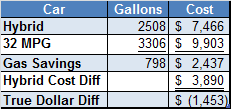

First some basic statistics. I have driven my car a little over 105,500 miles at this point. I have used about 2,508 gallons of gas costing me $7,466 over the last four years. I have had to fill up the car about 290 times. My mileage over the lifetime of the car has averaged 42 MPG which matches the expected MPG from the original sticker. Question 1 answered – advertised MPG achieved.
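As a quick sanity check on those totals, here is a minimal Python sketch. It uses only the rounded figures quoted above (the variable names are my own), so the results are approximate rather than a replay of the full fill-up log.

```python
# Totals quoted in the post; the mileage is rounded ("a little over 105,500").
miles_driven = 105_500
gallons_used = 2_508
fuel_cost = 7_466.00  # dollars

# Lifetime fuel economy, average pump price and fuel cost per mile.
lifetime_mpg = miles_driven / gallons_used
avg_price_per_gallon = fuel_cost / gallons_used
cost_per_mile = fuel_cost / miles_driven

print(f"Lifetime MPG:         {lifetime_mpg:.1f}")
print(f"Average price/gallon: ${avg_price_per_gallon:.2f}")
print(f"Fuel cost per mile:   ${cost_per_mile:.3f}")
```

The lifetime figure rounds to 42 MPG, in line with the sticker value.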

To explore question 2, I needed an average MPG for the EX. Since traditional cars have different city and highway MPG, I had to choose a value that made sense based on my driving, yet was conservative enough to give me a meaningful result. The 2007 Civic EX had an advertised MPG of 30 city and 38 highway. I do significantly more highway than city driving, but thought I’d be really conservative and choose 32 MPG for my comparison.

With that assumption in place, I can calculate the gas consumption I would have experienced with the EX. Over the 105,500 miles I would have used about 3,306 gallons of gas costing about $9,903.

What this means is that if I had purchased the EX in 2007 instead of the Hybrid I would have used about 798 more gallons of gas costing me an additional $2,437 over that time period. That is good to know, both in terms of my reduced carbon footprint and fuel cost savings.
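Since the result depends on the MPG assumed for the EX, a rough sensitivity check may be useful. The sketch below is an approximation under stated assumptions: it uses the rounded 105,500 miles and a single average price per gallon derived from my totals, so at 32 MPG it lands slightly below the 798 gallons and $2,437 quoted above, which come from my detailed records. The function name is purely illustrative.

```python
# Totals quoted in the post (mileage rounded).
MILES_DRIVEN = 105_500
HYBRID_GALLONS = 2_508
HYBRID_FUEL_COST = 7_466.00  # dollars


def extra_fuel_for_ex(ex_mpg):
    """Estimate the extra gallons and dollars the EX would have needed.

    Assumption: every gallon is priced at the hybrid's lifetime
    average price, not at the price paid on each fill-up.
    """
    avg_price = HYBRID_FUEL_COST / HYBRID_GALLONS
    ex_gallons = MILES_DRIVEN / ex_mpg
    extra_gallons = ex_gallons - HYBRID_GALLONS
    return extra_gallons, extra_gallons * avg_price


# Sweep from the advertised city figure (30) to the highway figure (38).
for mpg in (30, 32, 34, 36, 38):
    gallons, dollars = extra_fuel_for_ex(mpg)
    print(f"EX at {mpg} MPG: {gallons:6.0f} extra gallons, ${dollars:,.0f} extra")
```

The higher the EX's real-world MPG, the smaller the hybrid's fuel savings, so 32 MPG is the assumption that favors the hybrid.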

However, the two vehicles also differ in purchase price. The Hybrid MSRP was $22,600 while the EX was $18,710, so the Hybrid cost me $3,890 more to purchase.

So over the four years I’ve owned the car, the $2,437 saved on gas has not yet covered the $3,890 price premium: I’m currently $1,453 behind where I would be had I purchased the EX (again not considering the time value of money, which would make it worse). I will need to keep the car for several more years to break even, and in reality it may never be possible to break even if I start including the time value factor. Question 2 answered and it isn’t such good news.
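To put a rough number on "several more years", here is a small Python sketch of the break-even point. It assumes fuel savings keep accruing at the same rate per mile as over the first four years (same gas prices, same driving mix) and still ignores the time value of money, so treat it as a ballpark estimate only.

```python
# Figures quoted in the post.
hybrid_msrp = 22_600
ex_msrp = 18_710
fuel_savings = 2_437      # dollars saved on gas so far
miles_driven = 105_500    # rounded mileage so far

price_premium = hybrid_msrp - ex_msrp          # extra paid up front
net_position = fuel_savings - price_premium    # negative means still behind

# Assumption: future savings per mile match the historical average.
savings_per_mile = fuel_savings / miles_driven
break_even_miles = price_premium / savings_per_mile
remaining_miles = break_even_miles - miles_driven

print(f"Price premium:     ${price_premium:,}")
print(f"Net position:      ${net_position:,}")
print(f"Break-even at:     {break_even_miles:,.0f} miles")
print(f"Miles still to go: {remaining_miles:,.0f}")
```

Under these assumptions the break-even point falls somewhere past 168,000 miles, which at my current pace of roughly 26,000 miles a year means between two and three more years of driving.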

My conclusion is that purchasing a hybrid is not a financially smart choice. I also wonder if it is even an environmentally sound one given the chemicals involved in manufacturing the battery. Maybe the environment comes out ahead or maybe not. I think it is unfortunate that the equation for the consumer doesn’t even hit break even when trying to do the right thing.

